This month, I wish to do a brief overview of the pros and cons of using COMPRESS YES at the tablespace and index level.

Starting with Tablespaces

Compression came in with DB2 V3 and required a special processor for the CMPSC instruction to work at any really useful speed. Nothing changed for a loooooong time until DB2 V9 when XML compression was added. Then, in DB2 10, „partial compression“ came. This includes building the dictionary after 1.2MB data has been inserted by the LOAD utility and afterwards, in Db2 12 FL504, we got a brand-new compression routine, only for UTS, called „Huffman“ which also renamed the „old“ routine to „fixed length“. Then, in FL509, Huffman got fully externalized into the Db2 Catalog. Full utility support was also introduced. All of these methods require the creation, and maintenance, of a dictionary, where Db2 can look up the byte codes/words that actually do the compression.

A new ZPARM as well

Naturally, to specify the default compression routine in use, a new ZPARM appeared: „TS_COMPRESSION_TYPE“ – If set to HUFFMAN, then you could simply issue an ALTER like: ALTER TABLESPACE ROY.BOY COMPRESS YES, but if not set to Huffman, then the ALTER must be specific: ALTER TABLESPACE ROY.BOY COMPRESS YES HUFFMAN to get the new method.

Indexes

Indexes came in DB2 V9 and is not really the same as tablespace compression at all! Firstly, there is no dictionary as it is all handled in the Db2 I/O engine. It is a „prefix“ compression really and forces a larger index bufferpool size to get it done – this has benefits and costs, of course. The major difference is that the indexes are always compressed on disk down to 4k page size and expanded in the bufferpool up to the 8K, 16K or 32K size you have given them. The other thing to remember, is that index compression is only for leaf pages – nothing else gets compressed.

XML

XML spaces supported „fixed length“ compression in DB2 V9 but also needed a special processor to actually work.

LOB

Yes, you can nowadays also compress LOBs in Db2 12 and above, but it needs a zEDC Express feature installed to do so.

zEDC requires the following:

- z/OS® V2R1 (or later) operating system.

- One of the following:

- IBM® z15®, or later, with the Integrated Accelerator for zEDC

- IBM zEnterprise® EC12 CPC (with GA2 level microcode) or zBC12 CPC, or later, with the zEDC Express feature.

- zEDC software feature enabled in an IFAPRDxx parmlib member. For more information, see Product Enablement for zEnterprise Data Compression.

- Adequate 64-bit real storage that is configured to this z/OS image.

Huffman?

The first compression algorithms were Lempel-Ziv and the dictionary, of typically 4096 entries, each has a 12 bit „key“ for the value to be replaced. This worked great, but over the years newer methods have appeared. One of them is the Huffman Entropy Compression. It is similar to Lempel-Ziv but sorts the data in the dictionary on frequency before assigning a variable number of bits to the value. (This is why the old method is called „fixed length“ of course!) So, if you have millions of THE it would compress down to one single bit! A huge win over the 12 bits for Lempel-Ziv. But remember the great sayings: „It Depends“ and „Your mileage my vary,“ as not all data is good for compression and certainly not all data is good for Huffman compression.

Suck it and see!

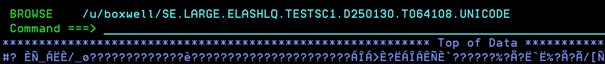

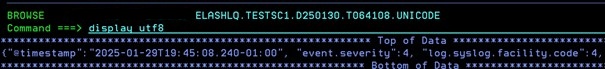

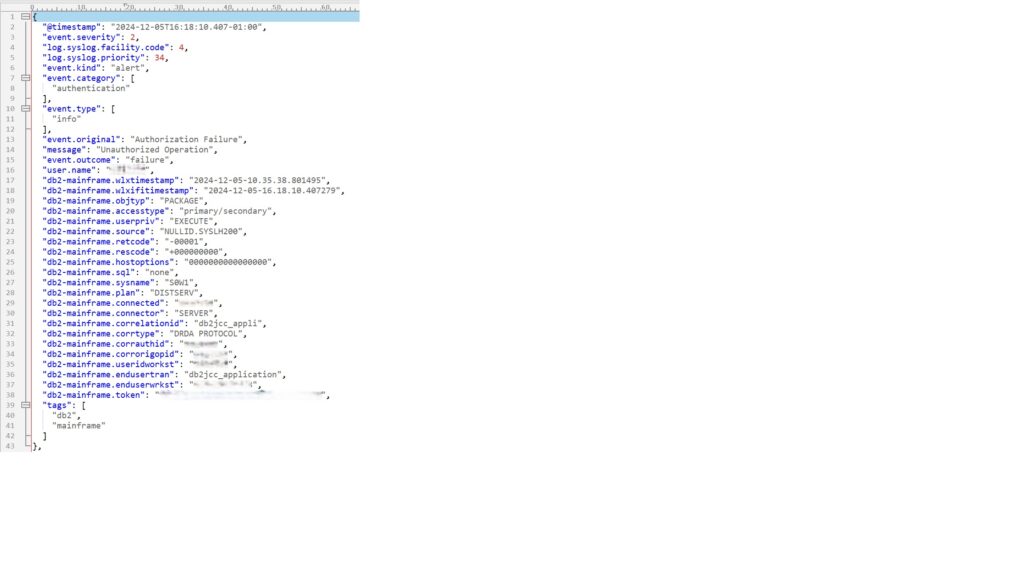

IBM supplied a very nice utility, way back in the day, called DSN1COMP which has become very good over the years and is much more user friendly than when it first came out! Basically, you give it the VSAM cluster name of the object you wish to test. A handful of parameters to give it a clue about what you have defined (FREEPAGE, PCTFREE, PAGESIZE and DSSIZE), and then Bob’s Your Uncle – you get a nice report:

DSN1COMP Idiots Guide

The basic JCL looks like this:

//DSN1COMP EXEC PGM=DSN1COMP,

// PARM='PCTFREE(0),FREEPAGE(0),ROWLIMIT(9999),REORG'

//STEPLIB DD DISP=SHR,DSN=xxxxxx.SDSNEXIT.xxxx

// DD DISP=SHR,DSN=xxxxxx.SDSNLOAD

//SYSPRINT DD SYSOUT=*

//SYSUT1 DD DISP=SHR,DSN=xxxxxx.DSNDBD.dbname.tbspace.I0001.A001The parameters are quite long and complex…

PAGESIZE(nnK) – Must be the page size of the object you are running against. Get it wrong and you „might produce unpredictable results“.

DSSIZE(nnnG) – Must be the DSSIZE of the object you are running against. Get it wrong and you „might produce unpredictable results“.

If you omit these two options, DSN1COMP will attempt to extract the data from the header page. Depending on how old the VSAM/Full image copy dataset is, this data might be found – or not!

NUMPARTS(nnnn) – DSN1COMP assumes the object is not partitioned. Get it wrong and you „might produce unpredictable results“. For UTS data, this parameter is not used as DSSIZE takes care of the requirement.

FREEPAGE(nnn) – This must be set to the current FREEPAGE of the object. From 0 – 255. Default is 0.

PCTFREE(nn) – This must be set to the current PCTFREE of the object. From 0 to 99. Default is 5.

MAXROWS(nnn) – This must be set to the current MAXROWS of object. From 1 to 255. Default is 255.

FULLCOPY – Informs DSN1COMP that the dataset is a full image copy dataset. If it is a partitioned dataset then you must also use the NUMPARTS parameter.

REORG – This switches DSN1COMP from „LOAD“ mode to „REORG“ mode and generally gives a more accurate compression report as DSN1COMP then simulates full record compression and not „after 1.2MB rows“ or whatever internal threshold is met. Even using REORG, you might not get a perfect match with the real compressed data as DSN1COMP uses sampling. Not valid for LOB spaces!

LOB – Informs DSN1COMP that it is now working with a LOB space – If used, *no* other parameters are allowed! You must have the zEDC for this option!

COMPTYPE(x-x) – HUFFMAN, FIXED or ALL. If not specified, DSN1COMP will check for hardware support and output HUFFMAN and FIXED, otherwise just FIXED.

ROWLIMIT(n-n) – An absolute must! From 1 to 99,000,000. Forget this and DSN1COMP will trundle through the *entire* dataset! I would set this to 99999 to begin with.

EXTNDICT(xxxxxxxx) – An eight-byte name for a generated externalized object deck compression dictionary to DD card DSN1DICT. This is not normally used.

LEAFLIM(n-n) – Limits the number of index leaf pages that DSN1COMP reads to calculate index compression rates. From 1 to 99,000,000. Default is all index leaf pages. Remember that index compression only compresses leaf pages. Note that this is the only parameter allowed for index datasets.

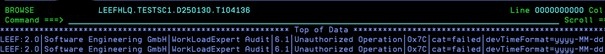

How it looks

DSN1999I START OF DSN1COMP FOR JOB BOXWELL$ STEP1

DSN1998I INPUT DSNAME = xxxxxx.DSNDBD.dbname.tbspace.I0001.A001 , VSAM

DSN1944I DSN1COMP INPUT PARAMETERS

INPUT DATA SET CONTAINS NON-COMPRESSED DATA

4,096 DICTIONARY SIZE USED

0 FREEPAGE VALUE USED

0 PCTFREE VALUE USED

COMPTYPE(ALL) REQUESTED

9,999 ROWLIMIT REQUESTED

ESTIMATE BASED ON DB2 REORG METHOD

255 MAXROWS VALUE USED DSN1940I DSN1COMP COMPRESSION REPORT

HARDWARE SUPPORT FOR HUFFMAN COMPRESSION IS AVAILABLE

+------------------------------+--------------+------------+------------------+

! ! ! Estimated ! Estimated state !

! ! UNCOMPRESSED ! Compressed ! Compressed !

! ! ! FIXED ! HUFFMAN !

+------------------------------+--------------+------------+------------------+

! DATA (IN KB) ! 2,269 ! 582 ! 506 !

! PERCENT SAVINGS ! ! 74%! 77%!

! ! ! ! !

! AVERAGE BYTES PER ROW ! 235 ! 62 ! 54 !

! PERCENT SAVINGS ! ! 73%! 77%!

! ! ! ! !

! DATA PAGES NEEDED ! 589 ! 154 ! 134 !

! PERCENT DATA PAGES SAVED ! ! 73%! 77%!

! ! ! ! !

! DICTIONARY PAGES REQUIRED ! 0 ! 64 ! 64 !

! ROWS ... TO BUILD DICTIONARY ! ! 1,149 ! 1,149 !

! ROWS ... TO PROVIDE ESTIMATE ! ! 9,999 ! 9,999 !

! DICTIONARY ENTRIES ! ! 4,096 ! 4,080 !

! ! ! ! !

! TOT PAGES (DICTNARY + DATA) ! 589 ! 218 ! 198 !

! PERCENT SAVINGS ! ! 62%! 66%!

+------------------------------+--------------+------------+------------------+

DSN1994I DSN1COMP COMPLETED SUCCESSFULLY, 605 PAGES PROCESSED I have edited the report to make it a bit thinner!

As you can easily see, the original dataset is 589 Pages. After „normal“ compression it is down to 218, which is a 62% reduction. However, with Huffman it squeezes down even more into 198 or 66%. So, according to my golden rule, it fits well! Once done and actioned by a REORG, remember to performance-test, as compression can bite you. The SYSIBM.SYSTABLES column PCTROWCOMP is used by the Db2 Optimizer for access plan selection! In other words: Test, Test and Test!

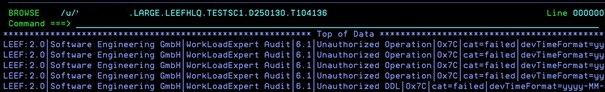

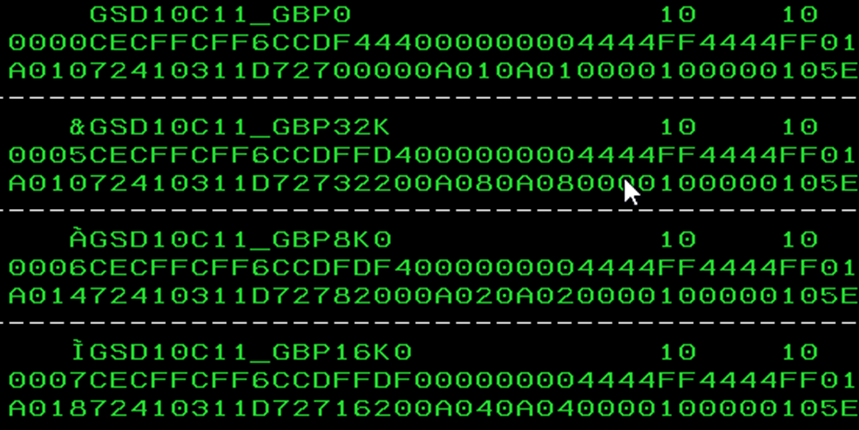

How do Indexes look?

JCL is similar, but different of course, due to the lack of parameters!

//STEP1 EXEC PGM=DSN1COMP,

// PARM='LEAFLIM(9999)'

//STEPLIB DD DISP=SHR,DSN=xxxxxx.SDSNEXIT.xxxx

// DD DISP=SHR,DSN=xxxxxx.SDSNLOAD

//SYSPRINT DD SYSOUT=*

//SYSUT1 DD DISP=SHR,DSN=xxxxxx.DSNDBD.dbname.ixspace.I0001.A001

DSN1999I START OF DSN1COMP FOR JOB BOXWELL$ STEP1

DSN1998I INPUT DSNAME = xxxxxx.DSNDBD.dbname.ixspace.I0001.A001 , VSAM

DSN1944I DSN1COMP INPUT PARAMETERS

PROCESSING PARMS FOR INDEX DATASET:

9,999 LEAF LEAFLIM REQUESTED

DSN1940I DSN1COMP COMPRESSION REPORT

9,999 REQUESTED LEAF LIMIT REACHED

9,999 Index Leaf Pages Processed

621,333 Keys Processed

621,333 Rids Processed

32,096 KB of Key Data Processed

11,947 KB of Compressed Keys Produced

EVALUATION OF COMPRESSION WITH DIFFERENT INDEX PAGE SIZES

----------------------------------------------

8 K Page Buffer Size yields a

51 % Reduction in Index Leaf Page Space

The Resulting Index would have approximately

49 % of the original index's Leaf Page Space

No Bufferpool Space would be unused

----------------------------------------------

----------------------------------------------

16 K Page Buffer Size yields a

63 % Reduction in Index Leaf Page Space

The Resulting Index would have approximately

37 % of the original index's Leaf Page Space

32 % of Bufferpool Space would be unused to

ensure keys fit into compressed buffers

----------------------------------------------

----------------------------------------------

32 K Page Buffer Size yields a

63 % Reduction in Index Leaf Page Space

The Resulting Index would have approximately

37 % of the original index's Leaf Page Space

66 % of Bufferpool Space would be unused to

ensure keys fit into compressed buffers

----------------------------------------------

DSN1994I DSN1COMP COMPLETED SUCCESSFULLY, 9,999 PAGES PROCESSED

Here you see the huge difference in output!

The DSN1COMP computes the three possible new page sizes for your index (8, 16 and 32), which enables you to decide which one is „best“ for the index to live in. In this example, the 8K BP is the clear winner as no space is wasted and it provides over 50% savings.

Problems?

The Db2 optimizer does *not* „know“ that the index is compressed, but it *does* know which bufferpool you have moved it to. Remember to correctly size all of these new bufferpools so that you do not get paging or excessive flushing!

All clear?

Now it is clear: You must still weigh up the pros and cons here! Do not blindly compress the world and expect to save TBs of disk space! The CPU required to decompress/compress is not free, and the Db2 I/O Engines must also work for Index Compression.

Rules Of Thumb

Ignore very small rows – typically, it makes no sense to compress row sizes under 16 at all! Remember you can still only get a maximum of 255 rows in a page (even if compressed!) and so very small rows would hit that limit and make the exercise pointless.

Very large rows – take a row that is 4,000 and let’s say it compress at 45%, leaving you with 2,200 – It still only gets one row in a page. (Previous 4,000 fits due to the page size limit of 4,054 and now with 2,200 still only one is allowed!) In this case, I would change the bufferpool to actually realize any benefits.

There are cases where compressing (or using Huffman to compress more) can negatively affect an application that is using page-level locking.

Obviously, do not compress tablespaces defined with MAXROWS=1.

If the space saving is less than 10% – 20%, it generally makes no sense to use compression.

Db2 only actually does the compression if the row will then be shorter – It could well be, with lots of timestamp fields, that it ends up being the same size and so Db2 saves you CPU by not compressing it.

Compression is *not* encryption and should not be used as such! Furthermore, compressing encrypted data is probably pointless.

LOAD inserts data until a sweet spot is reached, and then it starts to insert compressed data (True for INSERT, MERGE and LOAD SHRLEVEL CHANGE of course).

REORG builds the dictionary in the UNLOAD phase so all rows, if eligible, will be compressed.

To share or not to share?

Sharing dictionaries can be a great idea or a terrible idea – your data decides! In the LOAD statement, if you are loading at PARTITION level, you may add „COPYDICTIONARY nnnn“ to save the time of completely building a dictionary. This only makes sense if you *know* that the dictionary is good for your partition as well.

Keeping Dictionaries

You may decide to save all the time in building dictionaries by adding KEEPDICTIONARY to the LOAD or REORG utility cards. The only problem here, is sometimes data does change and a new dictionary will be required.

And what about Indexes?

Well, I tend to like the larger bufferpool here just to help reduce index page splits. So going to 8K and above can have a very nice knock-on effect into general performance and logging.

Looking for Candidates?

I would start with all big table partitions (over 199MB) that are not compressed at all and also perhaps take another look at any table partitions that compressed badly. Perhaps Huffman or rebuilding the dictionary could help? For indexes I would just list out the uncompressed ones larger than 99MB. Feel free to change the values!

Here’s some simple SQL to list out the interesting cases. For table partitions and tablespaces:

SELECT RTS.DBNAME

, RTS.NAME

, RTS.PARTITION

, RTS.SPACE / 1024 AS MB

, TS.INSTANCE

, TP.IPREFIX

, TP.COMPRESS

, TP.PAGESAVE

FROM SYSIBM.SYSTABLESPACESTATS RTS

,SYSIBM.SYSDATABASE DB

,SYSIBM.SYSTABLESPACE TS

,SYSIBM.SYSTABLEPART TP

WHERE RTS.DBNAME = TS.DBNAME

AND NOT RTS.DBNAME LIKE 'DSNDB0_'

AND RTS.NAME = TS.NAME

AND RTS.DBNAME = DB.NAME

AND RTS.INSTANCE = TS.INSTANCE

AND NOT DB.TYPE = 'W'

AND RTS.DBNAME = TP.DBNAME

AND RTS.NAME = TP.TSNAME

AND RTS.PARTITION = TP.PARTITION

AND RTS.SPACE / 1024 > 199

AND (TP.COMPRESS = ' '

OR (TP.COMPRESS IN ('Y' , 'F')

AND TP.PAGESAVE < 20))

ORDER BY 4 DESC, 1 , 2 , 3

FOR FETCH ONLY

WITH UR

;

For indexes:

SELECT RTS.DBNAME

, RTS.INDEXSPACE

, RTS.PARTITION

, RTS.SPACE / 1024 AS MB

, RTS.NLEAF

, RTS.INSTANCE

, IP.IPREFIX

FROM SYSIBM.SYSINDEXSPACESTATS RTS

,SYSIBM.SYSINDEXES IX

,SYSIBM.SYSINDEXPART IP

WHERE RTS.NAME = IX.NAME

AND NOT RTS.DBNAME LIKE 'DSNDB0_'

AND RTS.CREATOR = IX.CREATOR

AND RTS.PARTITION = IP.PARTITION

AND IX.CREATOR = IP.IXCREATOR

AND IX.NAME = IP.IXNAME

AND RTS.SPACE / 1024 > 99

AND IX.COMPRESS = 'N'

ORDER BY 4 DESC, 1 , 2 , 3

FOR FETCH ONLY

WITH UR

; What are your thoughts or experiences with compression? I would love to hear from you!

TTFN,

Roy Boxwell