Hi all! Welcome to the end-of-year goody that we traditionally hand out to guarantee you have something to celebrate at the end-of-year party! This time, I wish to introduce to you a full vulnerability check of your Db2 for z/OS systems.

DORA!

You should all be aware, and scared, of DORA by now. If not, read my prior newsletter 2024-11 DORA or check out my IDUG presentation or my recorded webinar. Whatever you do, you must get up to speed with DORA as it comes into force on the 17th Jan 2025, which is only one month away from the publishing date of this newsletter!

Not just Born in the USA!

Remember, DORA is valid for the whole wide world, not just businesses within the EU. If you do *any* sort of financial trading within the EU you are under the remit of DORA, just like you are with GDPR! Even if you are not trading within the EU bloc, doing a full vulnerability check is still a pretty good idea!

PCI DSS V4.0.1

We also now have the Payment Card Industry Data Security Standard (PCI DSS) V4.0.1 going live at the end of March 2025… Coincidence? I don’t think so. Mind you, at least the Americans do not fine anyone who fails!

What are We Offering?

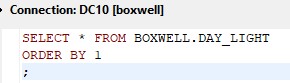

This year, the product is called the SecurityAudit HealthCheck for Db2 z/OS or SAC2 for short. It is a very lightweight and robust tool which basically does all of the CIS Vulnerability checks as published by the Center for Internet Security (CIS) in a document for Db2 13 on z/OS:

CIS IBM Z System Benchmarks (cisecurity.org)

https://www.cisecurity.org/benchmark/ibm_z

This contains everything you should do for audit and vulnerability checking and is well worth a read!

Step-By-Step

First Things First!

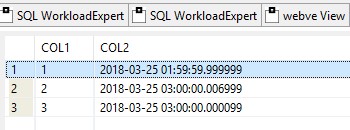

The first thing SAC2 does is evaluate *all* security-relevant ZPARMs and report which ones are not set to a “good” value. It then goes on to check that no settings have been left at their default values, the TCP/IP port number being a prime example. Then it finishes off by validating that SSL has been switched on for TCP/IP communications and that any and all TCP/IP ALIAS definitions also have the correct settings.
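To make the idea of a "left at default" check concrete, here is a minimal Python sketch. It is not how SAC2 itself works; the setting names `DRDA_PORT` and `SECURE_PORT_ENABLED`, the `Rule` class and the sample snapshot are all hypothetical and are not real ZPARM names. The only hard fact used is that 446 is the registered DRDA port.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Rule:
    setting: str                    # hypothetical setting name, not a real ZPARM
    default: str                    # value the setting has when nobody changed it
    required: Optional[str] = None  # value the audit insists on, if any


# Illustrative rules only. 446 is the registered DRDA port.
RULES = [
    Rule("DRDA_PORT", default="446"),
    Rule("SECURE_PORT_ENABLED", default="NO", required="YES"),
]


def check_settings(settings, rules):
    """Return (setting, message) pairs for every rule that is violated."""
    findings = []
    for rule in rules:
        value = settings.get(rule.setting)
        if value is None:
            # The snapshot did not contain this setting at all.
            findings.append((rule.setting, "value not reported"))
        elif rule.required is not None:
            # A specific value is demanded, whatever the default is.
            if value != rule.required:
                findings.append(
                    (rule.setting, f"is {value}, expected {rule.required}")
                )
        elif value == rule.default:
            # No specific value demanded, but the default must not be kept.
            findings.append((rule.setting, f"still at default {value}"))
    return findings


if __name__ == "__main__":
    snapshot = {"DRDA_PORT": "446", "SECURE_PORT_ENABLED": "NO"}
    for setting, message in check_settings(snapshot, RULES):
        print(f"{setting}: {message}")
```

Keeping the rules as data rather than as code means new checks can be added without touching the checking logic, which matters when the list of checks keeps growing.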

Communication is Important!

Next up is a full evaluation of your Communication Data Base (CDB). This data has been around for decades and started life for SNA and VTAM connections between Host Db2s. These days, SNA is dead and most of the connections are coming from PCs or Servers. That means that there *could* be a lot of dead data in the CDB and, even worse, ways of connecting to your mainframe that you did not even know, or forgot, existed! Think plain text passwords with SQLID translation, for example!

Danger in the Details

Naturally, blindly changing CDB rows is asking for trouble, so if SAC2 finds anything odd/old/suspicious here, you must create a project to start removal. There is a strong correlation between “Oh I can delete that row!” and “Why can’t any of my servers talk to the mainframe anymore?”. The tool points out certain restrictions and pre-reqs that have to be done *before* you hit the big button on the wall! The JDBC version, for example.

Taking it All for GRANTed?

GRANTs can be the root of all evil! GRANTs TO PUBLIC just make auditors cry, and use of WITH GRANT OPTION makes them jump up and down. Even IBM is now aware that blanket GRANTing can be dangerous for your health! SAC2 analyzes *all* GRANTs. First, it discovers any PUBLIC ones on the Catalog and Directory, as these should NEVER be done (with the one tiny exception of, perhaps on a good day when the sun is shining, the SYSIBM.SYSDUMMY1). Then it checks all User Data, as PUBLIC there is just lazy. Finally, checking everything for WITH GRANT OPTION makes sure you are working with modern security standards!
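The GRANT checks described above can be sketched in a few lines of Python. This is an illustration under assumptions, not SAC2's implementation: the rows are shaped loosely like SYSIBM.SYSTABAUTH (GRANTEE, TCREATOR, TTNAME and privilege columns where `G` marks WITH GRANT OPTION), only a subset of the privilege columns is looked at, and the `PAYROLL.SALARY` table and `APPUSER` ID are made up.

```python
# Privilege columns inspected in this sketch (a subset, chosen for brevity).
AUTH_COLUMNS = ("SELECTAUTH", "INSERTAUTH", "UPDATEAUTH", "DELETEAUTH")

# The one tolerated PUBLIC grant mentioned in the text.
ALLOWED_PUBLIC = {("SYSIBM", "SYSDUMMY1")}

# PUBLIC* is how a grant to PUBLIC AT ALL LOCATIONS is recorded.
PUBLIC_GRANTEES = ("PUBLIC", "PUBLIC*")


def classify_grant(row):
    """Return a list of audit issues for one table-privilege row."""
    issues = []
    grantee = row["GRANTEE"].strip()
    creator = row["TCREATOR"].strip()
    name = row["TTNAME"].strip()

    if grantee in PUBLIC_GRANTEES and (creator, name) not in ALLOWED_PUBLIC:
        kind = "Catalog/Directory" if creator == "SYSIBM" else "User Data"
        issues.append(f"PUBLIC grant on {kind} object {creator}.{name}")

    # 'G' in a privilege column means the privilege may be passed on.
    if any(row.get(col, " ") == "G" for col in AUTH_COLUMNS):
        issues.append(f"WITH GRANT OPTION held by {grantee} on {creator}.{name}")

    return issues


# Made-up sample rows for illustration.
SAMPLE_ROWS = [
    {"GRANTEE": "PUBLIC", "TCREATOR": "SYSIBM", "TTNAME": "SYSDUMMY1", "SELECTAUTH": "Y"},
    {"GRANTEE": "PUBLIC", "TCREATOR": "SYSIBM", "TTNAME": "SYSTABLES", "SELECTAUTH": "Y"},
    {"GRANTEE": "PUBLIC*", "TCREATOR": "PAYROLL", "TTNAME": "SALARY", "SELECTAUTH": "Y"},
    {"GRANTEE": "APPUSER", "TCREATOR": "PAYROLL", "TTNAME": "SALARY", "SELECTAUTH": "G"},
]

if __name__ == "__main__":
    for row in SAMPLE_ROWS:
        for issue in classify_grant(row):
            print(issue)
```

A real check would have to cover every authorization table in the Catalog, not just table privileges, but the shape of the logic stays the same: find PUBLIC, find grant option, report both.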

Fun Stuff!

These days you should be using Trusted Contexts to access from outside the Host. This then requires Roles and all of this needs tamper-proof Audit Policies. On top of all this are the extra bits and pieces of Row Permissions and Column Masks. All of these must be validated for the auditors!

Elevated Users?

Then it lists out the group of privileged User IDs. These all have elevated rights and must all be checked in detail: who has what, and why?

Recovery Status Report

Finally, it lists out a full Recovery Status Report so that you can be sure that, at the time of execution, all of your data was Recoverable.

It is a Lot to Process!

It is indeed. The first time you run it, you might well get tens of thousands of lines of output, but the important thing is to run it and break it down into little manageable sections that different groups can then work on. This is called “Due Diligence” and can save your firm millions of euros in fines.

Lead Overseer

Great job title, but if this person requests data then you have 30 days to supply everything they request. Not long at all! SAC2 does the lion’s share of the work for you.

Again and Again and Again

Remember, you must re-run this vulnerability check on a regular basis for two major reasons:

- Things change – Software, Malware, Attackers, Defenders, Networks, Db2 Releases etc.

- Checks get updated – The auditors are always looking for more!

Stay Out of Trouble!

Register, download, install and run today!

I hope it helps you!

TTFN

Roy Boxwell

Future Updates:

The SAC2 licensed version will be getting upgraded in the first quarter of 2025 to output the results of the run into a Comma Separated Values (CSV) file to make management reporting and delegation of projects to fix any found problems easier. It will also get System Level Backup (SLB) support added. SLB is good but you *still* need Full Image Copies! Further, it will be enhanced to directly interface with our WLX Audit product.
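To show why CSV output helps with delegation, here is a small Python sketch that turns a list of findings into CSV text, sorted so that each team's section stays together. The column layout (`section`, `object`, `finding`, `owner`) and the sample findings are purely hypothetical; the actual SAC2 CSV format is not described here and may look quite different.

```python
import csv
import io

# Hypothetical column layout, not the real SAC2 output format.
FIELDS = ["section", "object", "finding", "owner"]


def findings_to_csv(findings):
    """Render findings as CSV text, sorted by section and then object."""
    buffer = io.StringIO(newline="")
    writer = csv.DictWriter(buffer, fieldnames=FIELDS)
    writer.writeheader()
    for finding in sorted(findings, key=lambda f: (f["section"], f["object"])):
        # The csv module quotes fields that contain commas automatically.
        writer.writerow(finding)
    return buffer.getvalue()


# Made-up findings for illustration.
SAMPLE_FINDINGS = [
    {"section": "GRANTs", "object": "PAYROLL.SALARY",
     "finding": "PUBLIC grant, SELECT", "owner": "DBA team"},
    {"section": "CDB", "object": "LOCATION OLDHOST",
     "finding": "no recent connections", "owner": "Network team"},
]

if __name__ == "__main__":
    print(findings_to_csv(SAMPLE_FINDINGS), end="")
```

Once the findings are in this shape, a spreadsheet filter on the owner column is all a manager needs to hand each group its own to-do list.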