Lately I have been asked a lot about skip level migration and so, even though I was surprised that people are *still* on DB2 V8 (Even the nails have rusted on the perch…), I have dug out this “old” marketing/whitepaper below as I thought it could actually make a nice newsletter as a final last gasp info output for DB2 V8…

Obviously this is not written in the normal “Roy” style as it is primarily a marketing paper but there is still good data in it – and next month we will be back in the land of technical newsletters – I promise!

So here it is:

DB2 10 Skip Level Migration

A speedier way to DB2 10

For the second time in the life cycle of DB2 for z/OS, IBM supports a version jump for the migration, this time from version 8 to version 10. Ten years ago it was also possible to leave out a version, namely with the jump from version 5 to version 7. The direct jump from DB2 version 8 to DB2 version 10 is referred to as a “Skip-Level Migration”.

During the DB2 10 beta program, this function was available to selected customers. It has been intensively tested and is a great option for DB2 customers that are currently on DB2 version 8 (I sincerely hope that is not many!). If a site plans the skip-level migration ahead and prepares it wisely, the migration from V8 to DB2 10 can be as smooth as other migration scenarios. IBM estimates that the project will take around one and a half times as long as a simple, single-version DB2 migration. However, a skip-level migration carries a much greater risk because the software changes of two versions are implemented simultaneously.

General recommendations

This newsletter describes some special aspects to be considered when performing a skip-level migration and rounds out the information provided by IBM.

IBM’s best practices contain a customer recommendation for detailed migration planning as well as an extensive test strategy.

It is especially important to ascertain the current service levels of all applications when conducting this special type of migration. The measures described in this newsletter should support this.

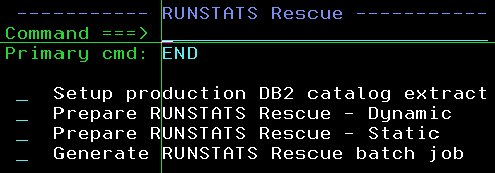

Test & review RUNSTATS

Regarding applications, IBM’s best practices strongly recommend that customers test and review their RUNSTATS procedures so that the DB2 Optimizer can choose efficient access paths using all of the required information in the DB2 catalog (including the new statistics introduced in DB2 9).

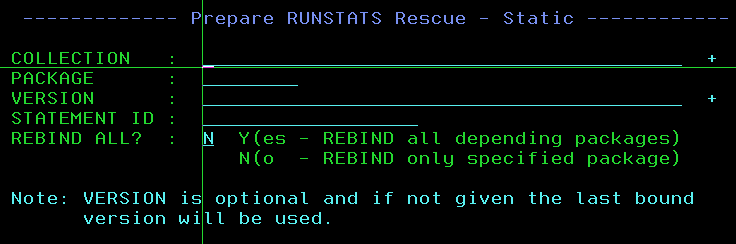

REBIND

Version 10 – as well as Version 9 – contains a number of Optimizer enhancements that can drastically improve performance. For static SQL, these improvements only take effect once the packages have been REBOUND after the migration. For dynamic SQL, the new Optimizer code is used instantly. For several reasons, customers should plan to REBIND all static application packages as part of the skip-level migration. Changes to static access paths can be cross-checked using the EXPLAIN information in the PLAN_TABLEs. Dynamic SQL access paths can be compared by using the dynamic statement cache (DSC) as the basis for an EXPLAIN.
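The PLAN_TABLE cross-check described above can be sketched roughly as follows. This is only an illustration, not how any particular tool does it: it assumes the PLAN_TABLE rows from before and after the REBIND have already been fetched into plain dictionaries, and the choice of `KEY_COLUMNS` as the comparison key is an assumption (the column names themselves are regular PLAN_TABLE columns). The helper names are hypothetical.

```python
from typing import Dict, List, Tuple

# Regular PLAN_TABLE columns; using exactly these as the "signature"
# of an access path is an assumption made for this sketch.
KEY_COLUMNS = ("METHOD", "ACCESSTYPE", "MATCHCOLS",
               "ACCESSNAME", "INDEXONLY", "PREFETCH")

Row = Dict[str, object]
StatementId = Tuple[str, str, int]  # (COLLID, PROGNAME, QUERYNO)


def group_by_statement(rows: List[Row]) -> Dict[StatementId, List[Row]]:
    """Collect the PLAN_TABLE rows that belong to one SQL statement."""
    grouped: Dict[StatementId, List[Row]] = {}
    for row in rows:
        key = (row["COLLID"], row["PROGNAME"], row["QUERYNO"])
        grouped.setdefault(key, []).append(row)
    return grouped


def signature(rows: List[Row]) -> Tuple:
    """Reduce a statement's rows to a comparable tuple, ordered by step."""
    ordered = sorted(rows, key=lambda r: (r["QBLOCKNO"], r["PLANNO"]))
    return tuple(tuple(r.get(col) for col in KEY_COLUMNS) for r in ordered)


def changed_statements(before: List[Row], after: List[Row]) -> List[StatementId]:
    """Return statements present in both sets whose signature differs."""
    old, new = group_by_statement(before), group_by_statement(after)
    return sorted(k for k in old.keys() & new.keys()
                  if signature(old[k]) != signature(new[k]))


# Made-up sample rows: one statement switches from an index to a scan.
v8_rows = [
    {"COLLID": "COLL1", "PROGNAME": "PGMA", "QUERYNO": 10, "QBLOCKNO": 1,
     "PLANNO": 1, "METHOD": 0, "ACCESSTYPE": "I", "MATCHCOLS": 2,
     "ACCESSNAME": "IX1", "INDEXONLY": "N", "PREFETCH": ""},
    {"COLLID": "COLL1", "PROGNAME": "PGMA", "QUERYNO": 20, "QBLOCKNO": 1,
     "PLANNO": 1, "METHOD": 0, "ACCESSTYPE": "I", "MATCHCOLS": 1,
     "ACCESSNAME": "IX2", "INDEXONLY": "Y", "PREFETCH": ""},
]
v10_rows = [
    dict(v8_rows[0], ACCESSTYPE="R", MATCHCOLS=0, ACCESSNAME="", PREFETCH="S"),
    dict(v8_rows[1]),
]

print(changed_statements(v8_rows, v10_rows))
```

A comparison like this only tells you that something changed, not whether the change is good or bad; that judgment needs additional information such as cost estimates or actual run times.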

Access Path Changes Due to Optimizer Enhancements

Part of any DB2 migration is the very time-consuming process of checking and validating all of the current access paths. With a skip-level migration, additional attention to the changed Optimizer behavior is strongly recommended.

Unfortunately, the access path changes caused by a migration are not predictable in advance. Or are they?

The Early Precheck

Each access path depends upon the rules and algorithms of the Optimizer and the DB2 version-dependent code.

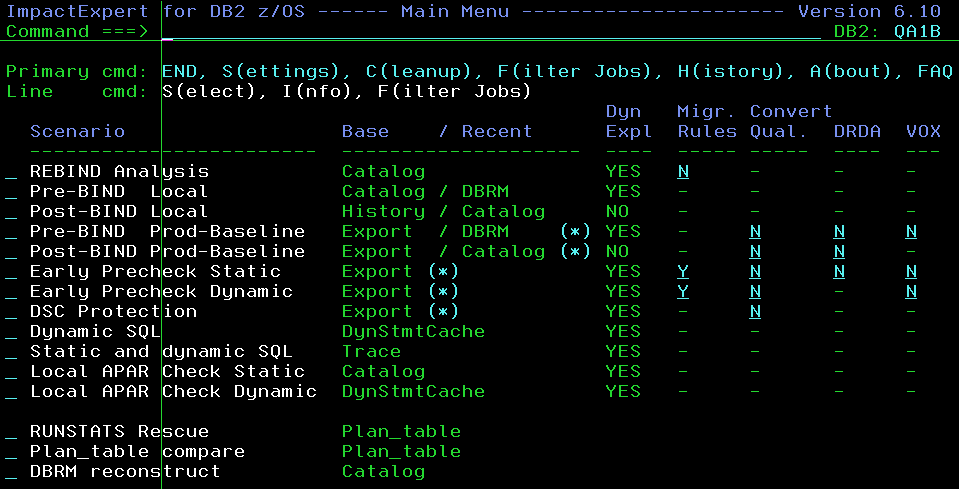

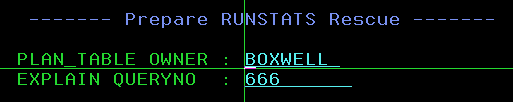

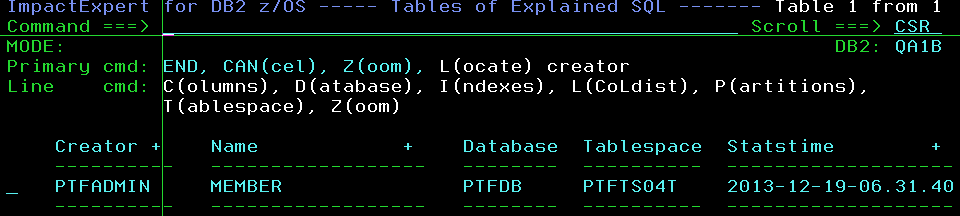

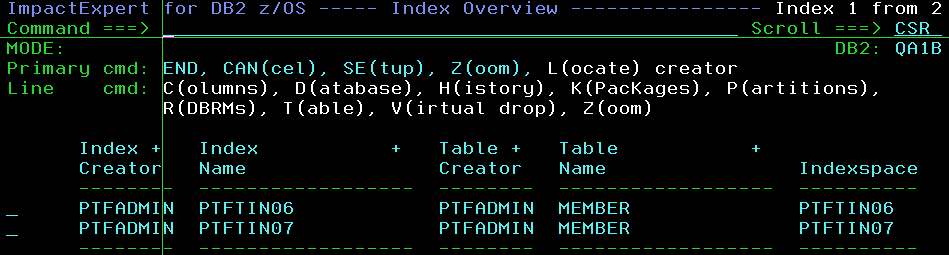

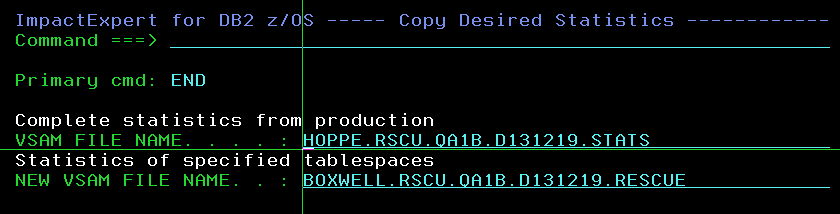

By comparing all version 8 access paths with the resulting access paths of version 10, the tool Bind ImpactExpert (BIX) identifies the changes necessary for the new Optimizer in advance.

DB2 applies several version-specific optimizations to the internal executable code of an access path. Thus, it is important to reactivate those performance options – fast column processing a.k.a. SPROCS (selects) and UPROCS (updates) etc. – because the options get “lost” during a migration without REBINDs.

One important thing to mention here: IBM automatically REBINDs all packages that are from version 5 and older. To stay in control of the access paths during the AUTO-REBIND: BIX-IT!

Bind ImpactExpert (BIX) safely ensured performance stability during migrations from V7 to V8 and from V8 to V9. According to individual service levels of applications being affected by the migration, the tool prechecks the whole environment or just a subset that requires special attention. At a number of customer installations, so-called Optimizer “patterns” were determined (patterns classify different types of access path changes).

Some examples:

- Index accesses change to table space scans for small tables

- Non-matching index scans change to table space scans for large indexes

The functional changes to RUNSTATS utilities in DB2 9 will also have an impact on the access paths since catalog statistics will change after executing the new RUNSTATS.

Version 8 also extended the functionality of the RUNSTATS utility. Adapting your RUNSTATS strategies accordingly can correct access path degradations that are discovered.

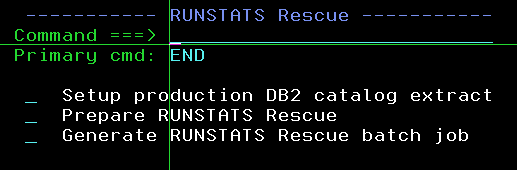

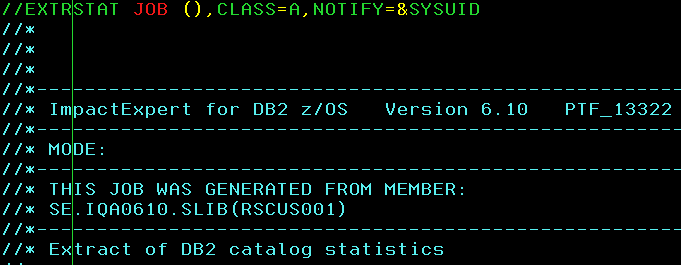

Preparing Suitable Catalog Statistics Prior to Migration

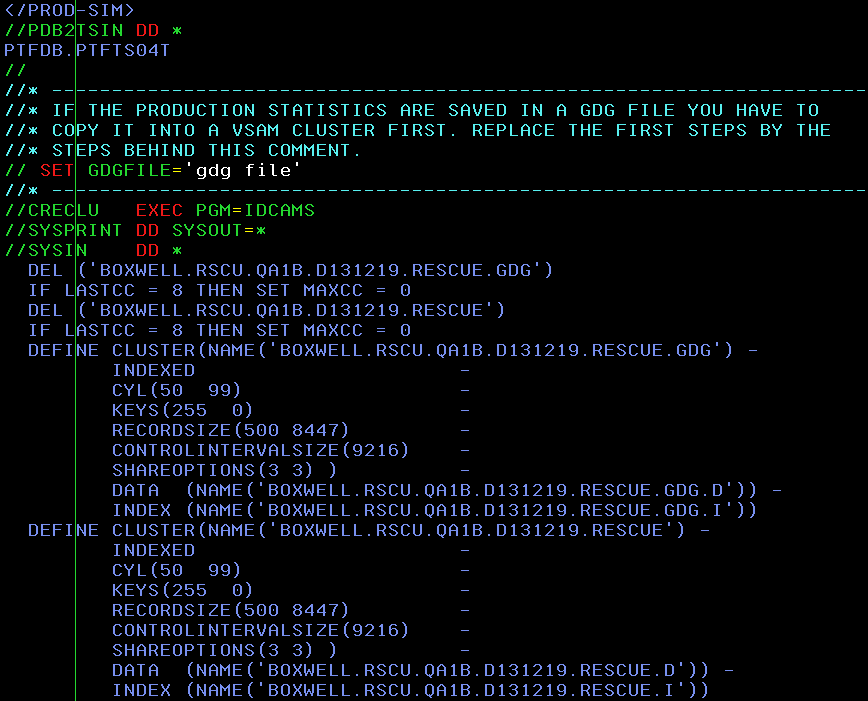

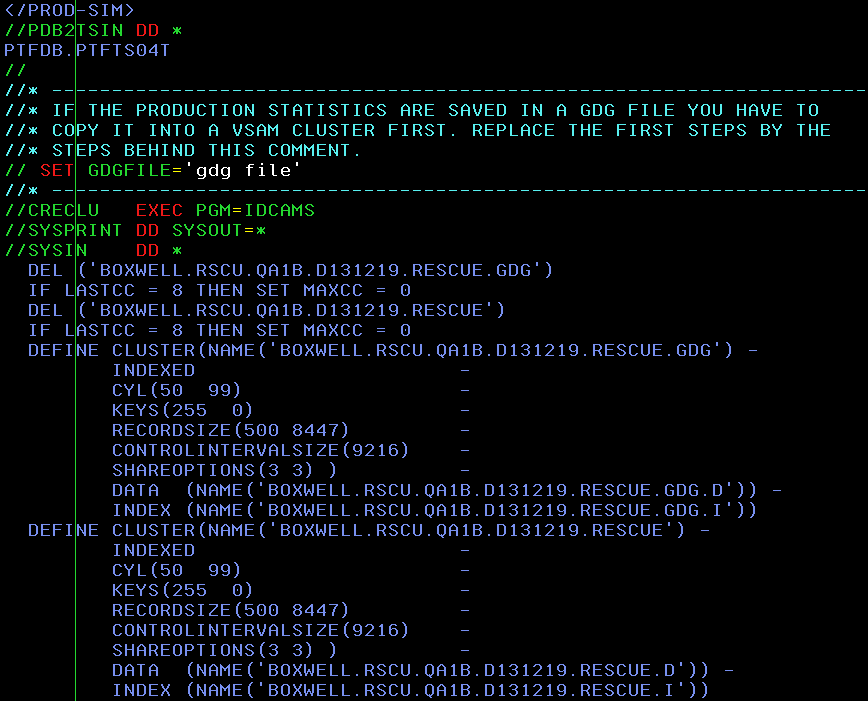

When preparing for a migration, special attention should be paid to your RUNSTATS procedures.

On the one hand, the Optimizer requires detailed statistics in order to choose (more) efficient access paths. On the other hand, after the migration, it is necessary to REBIND all packages in order to activate the new DB2 10 Optimizer code. Due to the amount of time required to execute RUNSTATS for all objects, it is not advisable to perform this on the day of the migration when entering DB2 conversion mode (CM). A RUNSTATS instantly invalidates the access paths in a DSC for dynamic SQL.

What is the best way to prepare the migration with RUNSTATS without risking the DSC?

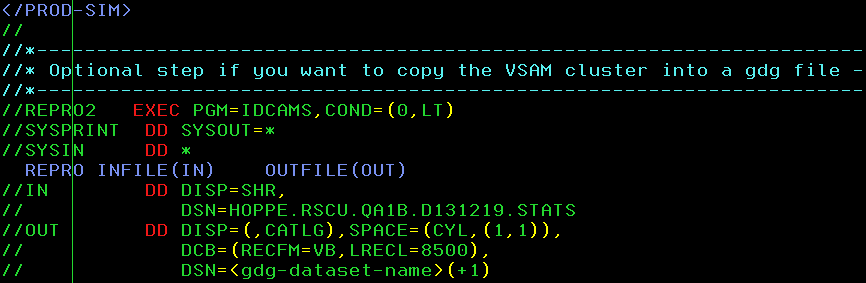

Bind ImpactExpert’s DSC Protection allows the execution of RUNSTATS for all objects under DB2 Version 8 without any risk by preventing unwanted invalidation of the DSC. This means there will be no uncontrolled access path changes for dynamic SQL during the preparation phase. With static SQL, the Optimizer only determines (new) access paths when the REBIND is executed.

What is a practical method to compare all V8 and DB2 10 access paths?

Bind ImpactExpert categorizes each SQL statement into:

- Same access paths (or unimportant changes)

- Improved access paths

- Degraded access paths

- Changed access paths (not automatically rated)
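To make the four categories a little more concrete, here is a minimal sketch of how such a classification could work in principle. It is not Bind ImpactExpert’s actual rule set, which is not described here: the cost inputs, the 10% tolerance and the function name `categorize` are all assumptions chosen purely for illustration.

```python
from typing import Optional


def categorize(path_changed: bool,
               old_cost: Optional[float],
               new_cost: Optional[float],
               tolerance: float = 0.10) -> str:
    """Rate one statement; costs are hypothetical optimizer estimates."""
    if not path_changed:
        return "same"
    # Without two usable cost estimates the change cannot be rated.
    if old_cost is None or new_cost is None or old_cost <= 0:
        return "changed"
    ratio = new_cost / old_cost
    if ratio <= 1 - tolerance:
        return "improved"
    if ratio >= 1 + tolerance:
        return "degraded"
    # Path differs but the estimate is within tolerance: leave unrated.
    return "changed"


for args in [(False, 100.0, 100.0), (True, 100.0, 50.0),
             (True, 100.0, 200.0), (True, 100.0, 105.0)]:
    print(args, "->", categorize(*args))
```

The point of the last category is that a changed access path with a similar estimate still deserves a human look, because estimates and real run times do not always agree.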

Is your RUNSTATS strategy under version 8 prepared for the new challenges of the DB2 Optimizer under Version 10?

Comparing access paths with Bind ImpactExpert before the migration will help with the preparations.

How do Optimizer patterns specifically influence your applications in your DB2 system?

This too is analyzed and answered by Bind ImpactExpert.

Enhancements to Bind ImpactExpert support skip-level migration. It provides extended rules and standards as well as results categories.

Using Bind ImpactExpert, the first analysis of the access path changes between versions 8 and 10 shows results similar to those found in the past. However, the percentage of changes during a skip-level migration is much higher.

During a “regular” migration, only 5% to 30% of all statements (dynamic and static) have access path changes. This number can double during skip-level migration.

Using APCOMPARE/APREUSE in DB2 10

There are two new REBIND options in DB2 10 that allow you to compare and reuse access paths (APCOMPARE and APREUSE).

These options suppress a REBIND execution in the event of an access path change. However, unless the packages were bound or rebound in DB2 9 NF, these options do not support skip-level migration. Using them later (for example in your daily maintenance procedure) is also highly controversial because they only know that an access path has “changed”, and so they suppress REBINDs for access path improvements as well.
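For reference, a small sketch of what REBIND commands using these options look like, generated here by a hypothetical helper. The option keywords (APCOMPARE, APREUSE, EXPLAIN) are real REBIND PACKAGE options; the function name, the collection and package names, and the defaults are assumptions for illustration only. Check the DB2 10 Command Reference for the full syntax and the values valid at your maintenance level.

```python
# Values assumed for this sketch; consult the Command Reference for
# the complete list supported by your DB2 level.
APCOMPARE_VALUES = {"NONE", "WARN", "ERROR"}
APREUSE_VALUES = {"NONE", "ERROR"}


def rebind_command(collection: str, package: str,
                   apcompare: str = "WARN",
                   apreuse: str = "NONE",
                   explain: bool = True) -> str:
    """Build the text of a REBIND PACKAGE command (hypothetical helper)."""
    if apcompare not in APCOMPARE_VALUES:
        raise ValueError(f"unsupported APCOMPARE value: {apcompare}")
    if apreuse not in APREUSE_VALUES:
        raise ValueError(f"unsupported APREUSE value: {apreuse}")
    parts = [f"REBIND PACKAGE({collection}.{package})"]
    if apcompare != "NONE":
        parts.append(f"APCOMPARE({apcompare})")
    if apreuse != "NONE":
        parts.append(f"APREUSE({apreuse})")
    parts.append("EXPLAIN(YES)" if explain else "EXPLAIN(NO)")
    return " ".join(parts)


print(rebind_command("COLL1", "PKGA"))
print(rebind_command("COLL1", "PKGA", apcompare="ERROR", apreuse="ERROR"))
```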

Using Package and Access Path Stability after the Migration

Version 9 introduced Plan Management, which is also known as either package or access path stability. This is available as a REBIND PACKAGE parameter and as a ZPARM. In DB2 10, an enhancement to this function provides you with the option to save the old version 8 access paths when performing the REBIND. If required, an access path can be rolled back to the old access path. PLANMGMT(EXTENDED), for example, has 3 access path versions (CURRENT, PREVIOUS, and ORIGINAL).
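The way the three copies move around under PLANMGMT(EXTENDED) is easier to see in a toy model. The sketch below is a simplified illustration of my understanding of the documented behavior, not DB2 code: the class and method names are hypothetical, and the access paths are just strings.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class PackageCopies:
    """Toy model of the package copies kept by PLANMGMT(EXTENDED)."""
    current: str
    previous: Optional[str] = None
    original: Optional[str] = None

    def rebind(self, new_path: str) -> None:
        # The first REBIND preserves the pre-existing copy as ORIGINAL;
        # later REBINDs leave ORIGINAL untouched.
        if self.original is None:
            self.original = self.current
        self.previous = self.current
        self.current = new_path

    def switch_previous(self) -> None:
        # Modeled as REBIND ... SWITCH(PREVIOUS): swap the two copies.
        if self.previous is None:
            raise ValueError("no PREVIOUS copy available")
        self.current, self.previous = self.previous, self.current

    def switch_original(self) -> None:
        # Modeled as REBIND ... SWITCH(ORIGINAL): CURRENT moves to
        # PREVIOUS and ORIGINAL is copied to CURRENT.
        if self.original is None:
            raise ValueError("no ORIGINAL copy available")
        self.previous = self.current
        self.current = self.original


pkg = PackageCopies(current="V8 path")
pkg.rebind("DB2 10 path A")
pkg.rebind("DB2 10 path B")
pkg.switch_original()
print(pkg)
```

In this model the V8 access path survives any number of later REBINDs as the ORIGINAL copy, which is what makes the fallback possible.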

Bind ImpactExpert conveniently includes Plan Management in the skip-level migration so a reversal of degraded access paths is possible at any time.

It reduces the overhead associated with this feature by up to 90% by limiting its use to those access paths that have degraded. For complete details about Plan Management, refer to SOFTWARE ENGINEERING’s white paper titled “Package Stability – A great feature with some weak points”.

This Bind ImpactExpert function – which was so useful during migrations from V8 to DB2 9 – is also usable for skip-level migration.

Summary

DB2 10 offers significant performance improvements. To get the most out of DB2 10 improvements, global REBINDs are a must. With Bind ImpactExpert, you can predict access paths before you migrate and avoid surprises in a production DB2 10 environment.

As usual any questions or comments are welcome,

TTFN Roy Boxwell

Senior Software Architect