We recently held one of our Design Councils; this one was all about Db2 12 and going Agile. After one of my presentations about verifying Db2 Code/Catalog/Function Levels, I was asked the following question:

“How many APARs really can affect access paths?”

I had to admit that I did not know the answer. I *hate* not knowing things, so I set off to find out how many Db2 Optimizer-relevant APARs there have been from 2016 up until today (Oct 17th 2017).

Db2 12 APAR review sqlaccesspath

First I used the following search argument, as I am only interested in Db2 APARs that carry the sqlaccesspath keyword, touch an Optimizer-relevant CSECT, are HIPER, and apply to Db2 12:

5740xyr00 AND sqlaccesspath AND dsnxo* AND yesHIPER AND C10

1. PI69349: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query 2017-04-14
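The search argument above is just a handful of terms joined with AND, so the variants used in this post can be produced by switching individual filters on or off. A minimal sketch of that idea; the function name `build_search` and its parameters are hypothetical and only serve to illustrate how the string is put together (the release code is a parameter because the post later swaps C10 for B10):

```python
def build_search(release="C10", hiper=True, csect="dsnxo*"):
    """Join the search terms with AND, skipping any filter that is switched off."""
    # Product id and keyword are always present in the searches shown in this post.
    terms = ["5740xyr00", "sqlaccesspath"]
    if csect:
        terms.append(csect)        # Optimizer CSECT filter
    if hiper:
        terms.append("yesHIPER")   # HIPER-only filter
    terms.append(release)          # release code, e.g. C10 for Db2 12
    return " AND ".join(terms)


print(build_search())                         # the search argument shown above
print(build_search(hiper=False))              # without the HIPER filter
print(build_search(hiper=False, csect=None))  # without the CSECT filter as well
```

Each call only builds the text of the search; the searches themselves were run by hand.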

Remove the yesHIPER and you get 22:

1. PI82797: LESS FILTERING INDEX SELECTED FOR INNER TABLE OF NESTED LOOP JOIN

A smaller, less filtering index is selected for the inner table with a Nested Loop Join. 2017-10-02

2. PI78122: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-10-02

3. PI83289: QUERY ACCESS PATH MAY BE UNPREDICTABLE FOR A QUERY WHERE THERE ARE EQUAL PREDICATES COVERING ALL THE COLUMNS ...

Query access path may be unpredictable for a query where there are equal predicates covering all the columns of a unique index. 2017-09-02

4. PI83454: INEFFICIENT ACCESS PATH IS USED BECAUSE OF AN INCORRECT COMPOUND FILTER FACTOR OF RANGE PREDICATES

The compound filter factor of two range predicates on the same column is underestimated, that causes an inefficient access 2017-09-02

5. PI83547: NON-MATCHING INDEX CHOSEN WHEN A MATCHING INDEX EXISTS

A non-matching index could be chosen when an index with good matching exists. 2017-09-02

6. PI82634: MULTI INDEX ACCESS CHOSEN WHEN A BETTER MATCHING INDEX EXISTS

MULTI-INDEX ACCESS WAS CHOSEN WHEN A MORE EFFICIENT INDEX USING BETTER MATCHING EXISTS. 2017-08-02

7. PI77792: ABSTRACT:INEFFICIENT ACCESS PATH WITH SORT DISTINCT IN CTE

AN INEFFICIENT ACCESS PATH CAN BE CHOSEN WHEN A SORT FOR DISTINCT IS NEEDED INSIDE A CTE. 2017-06-02

8. PI73290: Db2 FOR Z/OS USERS OF QUERIES WITH GROUP BY, DISTINCT, IN SUBQUERY OR NOT IN SUBQUERY.

Db2 may choose an inefficient access path as non-matching index access without matching predicates and screening predicates when 2017-05-01

9. PI73368: ABSTRACT=INEFFICIENT ACCESS PATH WITH SINGLE VALUE PAGE RANGE PREDICATE.

An inefficient access path can be chosen when page range access is used for a join predicate and the columns has a cardinality 2017-05-01

10. PI75966: INEFFICIENT ACCESS PATH IS SELECTED.

List prefetch plan with higher cost may be chosen mistakenly when there is one table in the query block and the index covers 2017-05-01

11. PI71368: R-SCAN ACCESS PATH CHOSEN OVER MULTI-INDEX ACCESS FOR A TABLE WHICH QUALIFIES FOR NPGTHRSH BEHAVIOR

R-scan access path chosen over multi-index access for a table which qualifies for NPGTHRSH behavior. 2017-04-20

12. PI75963: ACCESS PATH FOR A QUERY WITH GROUP BY AND ORDER BY CLAUSES INCLUDES AN UNNECESSARY SORT

For the following query the access path includes a sort although it is not necessary: 2017-04-20

13. PI69349: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query 2017-04-14

14. PI74019: DEFECT 40316 - TPCD (REVISTIT) QUERY #UV1B2 CL2 CPU REGRESSION IN V12

There is a regression for performance test. In V11, it could choose good access path, in V12, it chooses bad access path. 2017-04-03

15. PI72887: INEFFICIENT ACCESS PATH WITH EARLY OUT

INEFFICIENT ACCESS PATH WITH EARLY OUT 2017-04-03

16. PI71495: ABEND04E RC00E70005 AT DSNXGRDS DSNXOB2 M105 ON SQL STATEMENT WITH CONCENTRATE STATEMENTS WITH LITERALS

ABEND04E rc00e70005 at dsnxgrds dsnxob2 M105 in prepare SQL statement with attributes clause CONCENTRATE STATEMENTS WITH 2017-02-01

17. PI72800: INCORRECT FILTER FACTOR FOR PARTITION KEY OF VOLATILE TABLE

Db2 may calculate a incorrect filter factor for using BETWEEN and RANGE predicates, when a volatile table is defined as 2017-02-01

18. PI71110: FORWARDFIT OF PI70237

Performance problem when a user query choose R-scan in a single-table correlated subquery. 2017-02-01

19. PI68238: SQLACCESSPATH OF THE ENTRY WITH LATEST TIMESTAMP AND ACCESSTYPE NR IS NOT SELECTED WHEN BIND PKG WITH OPTHINT ...

When BIND PACKAGE with OPTHINT and EXPLAIN(YES), the latest timestamp NR path will lost the chance to compete with other 2017-01-12

20. PI68551: FF OF PI66289-INDEX WITH LESS MATCHING COLUMNS IS CHOSEN FOR INNER TABLE WHICH MAY CAUSE BAD PERFORMANCE

Index with less matching columns is chosen for inner table which may cause bad performance 2017-01-12

21. PI69414: POOR SQL PERFORMANCE FOR MERGE STATEMENT

Poor SQL performance for MERGE statement 2017-01-03

22. PI68086: ALLOW MORE TABLES TO BE ELIGIBLE FOR SPARSE INDEX ACCESS.

Due to a code bug, sometimes a table with very big non-correlated subquery (big means expensive in terms of elapsed 2016-12-01

Remove the filter for Optimizer CSECTs and you get 26:

1. PI82797: LESS FILTERING INDEX SELECTED FOR INNER TABLE OF NESTED LOOP JOIN

A smaller, less filtering index is selected for the inner table with a Nested Loop Join. 2017-10-02

2. PI78122: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-10-02

3. PI83289: QUERY ACCESS PATH MAY BE UNPREDICTABLE FOR A QUERY WHERE THERE ARE EQUAL PREDICATES COVERING ALL THE COLUMNS ...

Query access path may be unpredictable for a query where there are equal predicates covering all the columns of a unique index. 2017-09-02

4. PI83454: INEFFICIENT ACCESS PATH IS USED BECAUSE OF AN INCORRECT COMPOUND FILTER FACTOR OF RANGE PREDICATES

The compound filter factor of two range predicates on the same column is underestimated, that causes an inefficient access 2017-09-02

5. PI83547: NON-MATCHING INDEX CHOSEN WHEN A MATCHING INDEX EXISTS

A non-matching index could be chosen when an index with good matching exists. 2017-09-02

6. PI82634: MULTI INDEX ACCESS CHOSEN WHEN A BETTER MATCHING INDEX EXISTS

MULTI-INDEX ACCESS WAS CHOSEN WHEN A MORE EFFICIENT INDEX USING BETTER MATCHING EXISTS. 2017-08-02

7. PI77792: ABSTRACT:INEFFICIENT ACCESS PATH WITH SORT DISTINCT IN CTE

AN INEFFICIENT ACCESS PATH CAN BE CHOSEN WHEN A SORT FOR DISTINCT IS NEEDED INSIDE A CTE. 2017-06-02

8. PI73290: Db2 FOR Z/OS USERS OF QUERIES WITH GROUP BY, DISTINCT, IN SUBQUERY OR NOT IN SUBQUERY.

Db2 may choose an inefficient access path as non-matching index access without matching predicates and screening predicates when 2017-05-01

9. PI75966: INEFFICIENT ACCESS PATH IS SELECTED.

List prefetch plan with higher cost may be chosen mistakenly when there is one table in the query block and the index covers 2017-05-01

10. PI73368: ABSTRACT=INEFFICIENT ACCESS PATH WITH SINGLE VALUE PAGE RANGE PREDICATE.

An inefficient access path can be chosen when page range access is used for a join predicate and the columns has a cardinality 2017-05-01

11. PI71368: R-SCAN ACCESS PATH CHOSEN OVER MULTI-INDEX ACCESS FOR A TABLE WHICH QUALIFIES FOR NPGTHRSH BEHAVIOR

R-scan access path chosen over multi-index access for a table which qualifies for NPGTHRSH behavior. 2017-04-20

12. PI75963: ACCESS PATH FOR A QUERY WITH GROUP BY AND ORDER BY CLAUSES INCLUDES AN UNNECESSARY SORT

For the following query the access path includes a sort although it is not necessary: 2017-04-20

13. PI67390: SQLCODE100 MAY OCCUR FOR SQL STATEMENTS USING LIST PREFETCH OR MULTI INDEX ACCESS

SQLCODE +100 may occur for sql statements using List Prefetch or multi index access 2017-04-14

14. PI69349: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query 2017-04-14

15. PI69054: TOTALENTRIES TRUNCATED IN RTS FOR LARGE LOAD RESULTS IN REBUILD INDEX FAILING

2.6 billion row table load is truncating totalentries in RTS. 2017-04-12

16. PI74019: DEFECT 40316 - TPCD (REVISTIT) QUERY #UV1B2 CL2 CPU REGRESSION IN V12

There is a regression for performance test. In V11, it could choose good access path, in V12, it chooses bad access path. 2017-04-03

17. PI76121: REMOTE CONNECTION ATTEMPT RESULTS IN NONMATCHING INDEX SCAN OF DSNFEX01 WHEN ROW FOR AUTHID IS MISSING FROM ...

Db2DDF See APAR PI71693 for Db2 11/10 for z/OS. 2017-04-03

18. PI72887: INEFFICIENT ACCESS PATH WITH EARLY OUT

INEFFICIENT ACCESS PATH WITH EARLY OUT 2017-04-03

19. PI71495: ABEND04E RC00E70005 AT DSNXGRDS DSNXOB2 M105 ON SQL STATEMENT WITH CONCENTRATE STATEMENTS WITH LITERALS

ABEND04E rc00e70005 at dsnxgrds dsnxob2 M105 in prepare SQL statement with attributes clause CONCENTRATE STATEMENTS WITH 2017-02-01

20. PI72800: INCORRECT FILTER FACTOR FOR PARTITION KEY OF VOLATILE TABLE

Db2 may calculate a incorrect filter factor for using BETWEEN and RANGE predicates, when a volatile table is defined as 2017-02-01

21. PI71110: FORWARDFIT OF PI70237

Performance problem when a user query choose R-scan in a single-table correlated subquery. 2017-02-01

22. PI68238: SQLACCESSPATH OF THE ENTRY WITH LATEST TIMESTAMP AND ACCESSTYPE NR IS NOT SELECTED WHEN BIND PKG WITH OPTHINT ...

When BIND PACKAGE with OPTHINT and EXPLAIN(YES), the latest timestamp NR path will lost the chance to compete with other 2017-01-12

23. PI68551: FF OF PI66289-INDEX WITH LESS MATCHING COLUMNS IS CHOSEN FOR INNER TABLE WHICH MAY CAUSE BAD PERFORMANCE

Index with less matching columns is chosen for inner table which may cause bad performance 2017-01-12

24. PI69414: POOR SQL PERFORMANCE FOR MERGE STATEMENT

Poor SQL performance for MERGE statement 2017-01-03

25. PI68086: ALLOW MORE TABLES TO BE ELIGIBLE FOR SPARSE INDEX ACCESS.

Due to a code bug, sometimes a table with very big non-correlated subquery (big means expensive in terms of elapsed 2016-12-01

26. PI69029: REBIND APREUSESOURCE(PREVIOUS) IS NOT FINDING PREVIOUS ACCESSPATH WHEN USING PLANMGMT(BASIC)

When running this sample statement: REBIND PACKAGE(TEST.ABC00999.()) EXPLAIN(Y) - 2016-12-01
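To see which APARs only show up once the CSECT filter is dropped, the two lists can be compared as sets. A rough sketch, with the APAR numbers copied from the 22-entry and 26-entry lists above; the variable names are made up for illustration:

```python
# APAR numbers from the search with the Optimizer CSECT filter (22 entries).
optimizer_only = set("""
PI82797 PI78122 PI83289 PI83454 PI83547 PI82634 PI77792 PI73290
PI73368 PI75966 PI71368 PI75963 PI69349 PI74019 PI72887 PI71495
PI72800 PI71110 PI68238 PI68551 PI69414 PI68086
""".split())

# APAR numbers from the search without the CSECT filter (26 entries).
all_csects = set("""
PI82797 PI78122 PI83289 PI83454 PI83547 PI82634 PI77792 PI73290
PI75966 PI73368 PI71368 PI75963 PI67390 PI69349 PI69054 PI74019
PI76121 PI72887 PI71495 PI72800 PI71110 PI68238 PI68551 PI69414
PI68086 PI69029
""".split())

# APARs that appear only when the CSECT filter is removed.
extra = sorted(all_csects - optimizer_only)
print(extra)  # ['PI67390', 'PI69029', 'PI69054', 'PI76121']
```

The four extra entries are the ones whose fix is tagged with sqlaccesspath but does not sit in a dsnxo* CSECT.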

So we are talking about just over two per month…
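The "just over two per month" figure can be checked with a little arithmetic. A small sketch, assuming the period runs from the oldest date in the list above (2016-12-01) to the newest (2017-10-02) and counting every calendar month touched:

```python
from datetime import date

apar_count = 26               # entries in the list without the CSECT filter
first = date(2016, 12, 1)     # oldest date in that list
last = date(2017, 10, 2)      # newest date in that list

# Calendar months covered, counting both the first and the last month.
months = (last.year - first.year) * 12 + (last.month - first.month) + 1

rate = apar_count / months
print(months)           # 11
print(f"{rate:.1f}")    # 2.4
```

Counting the months differently (for example, only full months) shifts the figure slightly, but it stays a bit above two.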

Db2 11 APAR review sqlaccesspath

Just swap the C10 for B10 in the search and do it all again. With all the filters in place, including yesHIPER, you get five:

1. PI78532: NON-MATCHING INDEX CHOSEN WHEN A MATCHING INDEX EXISTS 17/08/30 PTF PECHANGE

A non-matching index could be chosen when an index with good matching exists. 2017-08-30

2. PI58411: INCORROUT CAN OCCUR FOR AN UPDATE QUERY USING TEMPORAL TABLES.

Temporal table has multiple indexes. One index to support the primary key constraint and one to support queries against the 2017-01-04

3. PI62713: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query with LEFT OUTER JOIN and ORDER BY and also running with Sort 2016-11-02

4. PI64779: SELECT DISTINCT RETURNS DUPLICATE VALUES.

Incorrect output can occur when the DISTINCT keyword is used, matching index access is used on the IN-list, but the IN-list 2016-10-03

5. PI61893: INCORROUT WITH UPDATE STATEMENT AND CORRELATED SUBQUERY

A INCORROUT conditions occurs when a UPDATE statement is coded with a correlated subquery that contains a DISTINCT and FETCH 2016-08-02

Again, remove the yesHIPER and now there are 54:

1. PI79438: INEFFICIENT ACCESS PATH FOR QUERY WITH MIN/MAX AND NO GROUP BY

An inefficient access path can be chosen when a query contains a MIN/MAX function with no Group BY. 2017-10-02

2. PI84286: JOIN PREDICATE WITH CAST FUNCTION NON-INDEXABLE

A join predicate can become non-indexable if the predicate contains a decimal function without the length and scale 2017-10-02

3. PI83769: AN INEFFICIENT INDEX CAN BE SELECTED TO PARTICIPATE IN MULTI-INDEX ACCESS

AN INEFFICIENT INDEX CAN BE SELECTED TO PARTICIPATE IN MULTI-INDEX ACCESS WHEN THERE IS A HIGH DEGREE OF UNCERTAINTY 2017-09-26

4. PI82601: LESS FILTERING INDEX SELECTED FOR INNER TABLE OF NESTED LOOP JOIN

A smaller, less filtering index is selected for the inner table with a Nested Loop Join. 2017-09-02

5. PI82772: INEFFICIENT ACCESS PATH IS USED BECAUSE OF AN INCORRECT COMPOUND FILTER FACTOR OF RANGE PREDICATES

The compound filter factor of two range predicates on the same column is underestimated, that causes an inefficient access 2017-09-02

6. PI63607: INEFFICIENT ACCESS PATH COULD OCCUR WITH CARTESIAN JOIN

An inefficient access path could occur when the result of a Cartesian join does not produce better index matching on the 2017-08-30

7. PI78532: NON-MATCHING INDEX CHOSEN WHEN A MATCHING INDEX EXISTS 17/08/30 PTF PECHANGE

A non-matching index could be chosen when an index with good matching exists. 2017-08-30

8. PI79775: INDEX PROBING NOT BEING INVOKED

Under certain conditions, index probing is not being utilized for predicates that calculate a FF with no matching rows 2017-08-02

9. PI80690: AE PI76369 FIX COMPLETION

AE PI76369 fix completion. 2017-07-05

10. PI76369: ABSTRACT:INEFFICIENT ACCESS PATH WITH SORT DISTINCT IN CTE

AN INEFFICIENT ACCESS PATH CAN BE CHOSEN WHEN A SORT FOR DISTINCT IS NEEDED INSIDE A CTE. 2017-06-05

11. PI75212: DURING AUTOBIND USING QUERYACCELERATION (ENABLE) BIND OPTION, STATIC QUERY IS BOUND FOR ACCELERATION ...

During AUTOBIND for a package bound QUERYACCELERATION(ENABLE), Db2 unexpectedly selects an IDAA access path and binds the query 2017-06-02

12. PI59793: UNDERESTIMATED TOTAL INDEX FILTERING WITH PAGE RANGE SCREENING WHEN THE PAGE RANGE COLUMNS ARE NOT IN THE INDEX

An inaccurate IMFFADJ value will be generated in instances where when page range screening is performed and the columns used for 2017-05-03

13. PI62376: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-04-11

14. PI75342: ACCESS PATH FOR A QUERY WITH GROUP BY AND ORDER BY CLAUSES INCLUDES AN UNNECESSARY SORT.

For the following query the access path includes a sort although it is not necessary: 2017-04-03

15. PI72177: INEFFICIENT ACCESS PATH IS SELECTED.

List prefetch plan with higher cost may be chosen mistakenly when there is one table in the query block and the index covers 2017-04-03

16. PI70394: ABSTRACT=INEFFICIENT ACCESS PATH WITH SINGLE VALUE PAGE RANGE PREDICATE.

An inefficient access path can be chosen when page range access is used for a join predicate and the columns has a cardinality 2017-03-09

17. PI73338: INEFFICIENT JOIN ACCESS ON PARTITIONED TABLE

Nested loop join may be chosen as the join type when hybrid join would likely have been a better choice. 2017-02-01

18. PI66289: INDEX WITH LESS MATCHING COLUMNS IS CHOSEN FOR INNER TABLE WHICH MAY CAUSE BAD PERFORMANCE

Index with less matching columns is chosen for inner table which may cause bad performance 2017-01-12

19. PI65041: SQLACCESSPATH OF THE ENTRY WITH LATEST TIMESTAMP AND ACCESSTYPE NR IS NOT SELECTED WHEN BIND PKG WITH OPTHINT ...

When BIND PACKAGE with OPTHINT and EXPLAIN(YES), the latest timestamp NR path will lost the chance to compete with other 2017-01-12

20. PI58411: INCORROUT CAN OCCUR FOR AN UPDATE QUERY USING TEMPORAL TABLES.

Temporal table has multiple indexes. One index to support the primary key constraint and one to support queries against the 2017-01-04

21. PI69685: ABEND04E RC00E70005 AT DSNXGRDS DSNXOB2 M105 ON AN SQL STATEMENT WITH ATTRIBUTE CONCENTRATE STATEMENTS WITH ...

ABEND04E rc00e70005 at dsnxgrds dsnxob2 M105 in a prepared SQL statement with prepared attributes clause CONCENTRATE STATEMENTS 2017-01-03

22. PI71365: R-SCAN ACCESS PATH CHOSEN OVER MULTI-INDEX ACCESS FOR A TABLE WHICH QUALIFIES FOR NPGTHRSH BEHAVIOR

R-scan access path chosen over multi-index access for a table which qualifies for NPGTHRSH behavior. 2017-01-03

23. PI68896: INCORRECT FILTER FACTOR FOR PARTITION KEY OF VOLATILE TABLE

Db2 may calculate a incorrect filter factor for using BETWEEN and RANGE predicates, when a volatile table is defined as 2017-01-03

24. PI71415: POOR SQL PERFORMANCE WHEN THE ACCESS PATH USES NON-MATCHING INDEX FOR INDEX SKIPPING

Poor SQL Performance when the access path uses non-matching index for index skipping . 2017-01-03

25. PI63541: INEFFICIENT ACCESS PATH WITH EARLY OUT

Ineffecient access path can be chosen when the access plan qualifies for index skipping but no index covers the join 2017-01-03

26. PI68380: SUBOPTIMAL ACCESS PATH WITH A NESTED LOOP JOIN AND MULTI-INDEX ACCESS

A suboptimal access path is chosen when multi-index access is chosen as the inner table for a nested loop join. A hybrid join 2016-12-01

27. PI67499: INEFFICIENT NON-MATCHING INDEX SCAN IS SELECTED WHEN THERE ARE MORE THAN 1 TABLES IN THE QUERY BLOCK WITH ...

Inefficient non-matching index scan is selected when when the query contains DISTINCT in the query and there are more than 1 2016-12-01

28. PI70237: INEFFICIENT ACCESS PATH WHEN A QUERY HAS INSUBQ OR EXISTS-SUBQ AND THE SUBQUERY HAS A SINGLE TABLE

Inefficient access path when a query has INSUBQUERY or EXISTS-SUBQUERY and the subquery has a single table. Optimizer 2016-12-01

29. PI66248: INEFFICIENT ACCESS PATH WHEN NESTED LOOP JOIN USED TO ACCESS A INNER MATERIALIZED QUERY BLOCK

An inefficient rscan access path for the inner table of a nested loop join when the the inner table is a materialized workfile. 2016-12-01

30. PI64874: INDEX PROBING MAY ACCESS UNQUALIFIED PARTITION AT PREPARE TIME

Unqualified partitions are claimed by index probing at prepare time, that causes unnecessary locking issue and group buffer 2016-11-02

31. PI62713: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query with LEFT OUTER JOIN and ORDER BY and also running with Sort 2016-11-02

32. PI52204: INCORRECT DPSI LEAF PAGE ESTIMATION WHEN ALL THE PARTITION KEYS CONTAINS LOCAL PREDICATES

Inefficient access path is selected due to incorrect DPSI leaf page estimation when all the partition keys contains local 2016-10-03

33. PI64779: SELECT DISTINCT RETURNS DUPLICATE VALUES.

Incorrect output can occur when the DISTINCT keyword is used, matching index access is used on the IN-list, but the IN-list 2016-10-03

34. PI64234: INEFFICIENT ACCESS PATH INVOLVING RANGE LIST PREDICATE(S)

A Range list access path could be chosen when a more efficient access path is available. 2016-10-03

35. PI64089: PERFORMANCE IMPACT WITH CTE SELECTED FROM A VIEW

When a CTE is defined in a view, a sub-optimal access path may occur. A optimal access path will be estimated for the CTE, and 2016-10-03

36. PI61435: INCORRECT FILTER FACTOR MAY BE ASSIGNED FOR PAGE RANGE SCREENING PREDICATES WHEN PARTITION KEYS ARE DEFINED AS ...

Incorrect filter factor may be assigned for page range screening predicates when partition keys are defined as VARCHAR 2016-10-03

37. PI60761: Enable NPGTHRSH for qualified partitions of a partitioned table based on partition level NPAGES.

The code to enable NPGTHRSH for qualified partitions of a partitioned table based on partition level stats (NPAGES) 2016-09-02

38. PI61886: IN SUBQUERY FAILS TO MATCH ON INDEXABLE IN LIST COLUMN

For a query which contains an IN list on a subquery, Db2 currently will use the IN list column in an index match if the 2016-09-02

39. PI60056: INEFFICIENT ACCESS PATH WITH AGGREGATE FUNCTIONS SELECT(MAX) OR SELECT(MIN).

Less than optimal performance is the result when the optimizer makes a inefficient access path decision when the aggregate 2016-08-02

40. PI60333: UNNECESSARY SORT FOR ORDER BY WHEN DISTINCT/GROUP BY LIST COVERS A UNIQUE INDEX AND SELECTED INDEX SUPPORT ...

When a query contains an ORDER BY and DISTINCT/GROUP BY clause whose columns cover a unique index, Db2 selects an index with 2016-08-02

41. PI61893: INCORROUT WITH UPDATE STATEMENT AND CORRELATED SUBQUERY

A INCORROUT conditions occurs when a UPDATE statement is coded with a correlated subquery that contains a DISTINCT and FETCH 2016-08-02

42. PI60206: POOR SQL PERFORMANCE OF A QUERY THAT HAS A CORRELATED SUBQUERY COST REDUCTION

Poor SQL performance of a query that has a correlated subquery cost reduction . 2016-08-02

43. PI59348: INEFFICIENT INDEX SELECTION FOR THE INNER TABLE OF A JOIN

Less than optimal performance is the result when the optimizer makes a inefficient index selection for the inner table of a 2016-07-15

44. PI57513: GROUP BY/DISTINCT/MIN/MAX CLAUSES RESULT IN INCORRECT ACCESS PATH DUE TO INVALID COST REDUCTION

In V11, materialized work files which are used as the inner tables for left joins are being processed using a nested loop 2016-07-04

45. PI61155: INCORRECT COMPOUND FILTER FACTOR ESTIMATION FOR TWO RANGE PREDICATES

Inefficient index is selected due to incorrect compound filter factor estimation for two range predicates. 2016-07-04

46. PI50999: INCORRECT MATCHING FILTER FACTOR ESTIMATION FOR DPSI WHEN THE JOIN PREDICATES INVOLVE PARTITION KEY

Inefficient access path is used in a join or correlated subquery because IMFF of DPSI is not correctly estimated when the join 2016-06-02

47. PI58329: INCORRECT VALUE IN QW0022RX FIELD FOR IFCID022 WHEN UNDER REOPT(ONCE) OR REOPT(AUTO)

Incorrect value in QW0022RX field for IFCID022 when under REOPT ONCE OR REOPT AUTO . 2016-05-04

48. PI53774: INEFFICIENT ACCESS PATH CHOSEN WHEN Db2 INCORRECTLY ESTIMATES THE FILTERING WHEN COMBINING TWO RANGE ...

Inefficient access path may be chosen when Db2 incorrectly estimates the filtering when combining two range predicates into 2016-04-05

49. PI54988: OPTIMAL INDEX ACCESS MAY NOT BE USED WHEN STATISTICS IS NOT COLLECTED

Optimal index access may not be used when statistics is not collected. 2016-04-05

50. PI44963: INCORRECT COST ESTIMATION FOR I1 INDEX SCAN

Cost estimation in DSN_STATEMNT_TABLE is very high for one-fetch index scan(I1). Prefetch method is 'D' in the 2016-03-10

51. PI53790: INCORRECT ACCESS PATH CHOSEN FOR OPTIMIZE FOR 1 ROW

Db2 is producing an incorrect and inefficient access path in V11 when the OPTIMIZE FOR 1 ROW clause is added to a query which 2016-03-02

52. PI50063: AN INACCURATE COMPOUND FILTER FACTOR MAY BE PRODUCED FOR A PREDICATE WITH AN OR CLAUSE

AN INACCURATE COMPOUND FILTER FACTOR MAY BE PRODUCED FOR A PREDICATE WITH AN OR CLAUSE 2016-02-01

53. PI49507: DIFFERENT ACCESS PATH IS USED WHEN QUERY ON VIEW INSTEAD OF BASE TABLE.

A view is directly created on a base table. SQL statement on the view should be equivalent to the SQL on the base table. 2016-02-01

54. PI49557: INEFFICIENT ACCESS PATH FOR QUERY WITH FUNCTION MIN OR MAX BY INCORRECT REDUCTION FOR EARLY OUT

Inefficient access path for query with function MIN or MAX by incorrect reduction for early out 2016-01-04

Remove the filter for Optimizer CSECTs and you get 56:

1. PI79438: INEFFICIENT ACCESS PATH FOR QUERY WITH MIN/MAX AND NO GROUP BY

An inefficient access path can be chosen when a query contains a MIN/MAX function with no Group BY. 2017-10-02

2. PI84286: JOIN PREDICATE WITH CAST FUNCTION NON-INDEXABLE

A join predicate can become non-indexable if the predicate contains a decimal function without the length and scale 2017-10-02

3. PI83769: AN INEFFICIENT INDEX CAN BE SELECTED TO PARTICIPATE IN MULTI-INDEX ACCESS

AN INEFFICIENT INDEX CAN BE SELECTED TO PARTICIPATE IN MULTI-INDEX ACCESS WHEN THERE IS A HIGH DEGREE OF UNCERTAINTY 2017-09-26

4. PI86573: BETTER MATCHING INDEX NOT CHOSEN WHEN COMPETING INDEXES CONTAIN A SUBSET OF THE SAME COLUMNS.

A better matching index can be overlooked when the competing indexes share a set of the same columns. 2017-09-21

5. PI82772: INEFFICIENT ACCESS PATH IS USED BECAUSE OF AN INCORRECT COMPOUND FILTER FACTOR OF RANGE PREDICATES

The compound filter factor of two range predicates on the same column is underestimated, that causes an inefficient access 2017-09-02

6. PI82601: LESS FILTERING INDEX SELECTED FOR INNER TABLE OF NESTED LOOP JOIN

A smaller, less filtering index is selected for the inner table with a Nested Loop Join. 2017-09-02

7. PI78532: NON-MATCHING INDEX CHOSEN WHEN A MATCHING INDEX EXISTS 17/08/30 PTF PECHANGE

A non-matching index could be chosen when an index with good matching exists. 2017-08-30

8. PI63607: INEFFICIENT ACCESS PATH COULD OCCUR WITH CARTESIAN JOIN

An inefficient access path could occur when the result of a Cartesian join does not produce better index matching on the 2017-08-30

9. PI79775: INDEX PROBING NOT BEING INVOKED

Under certain conditions, index probing is not being utilized for predicates that calculate a FF with no matching rows 2017-08-02

10. PI80690: AE PI76369 FIX COMPLETION

AE PI76369 fix completion. 2017-07-05

11. PI76369: ABSTRACT:INEFFICIENT ACCESS PATH WITH SORT DISTINCT IN CTE

AN INEFFICIENT ACCESS PATH CAN BE CHOSEN WHEN A SORT FOR DISTINCT IS NEEDED INSIDE A CTE. 2017-06-05

12. PI75212: DURING AUTOBIND USING QUERYACCELERATION (ENABLE) BIND OPTION, STATIC QUERY IS BOUND FOR ACCELERATION ...

During AUTOBIND for a package bound QUERYACCELERATION(ENABLE), Db2 unexpectedly selects an IDAA access path and binds the query 2017-06-02

13. PI59793: UNDERESTIMATED TOTAL INDEX FILTERING WITH PAGE RANGE SCREENING WHEN THE PAGE RANGE COLUMNS ARE NOT IN THE INDEX

An inaccurate IMFFADJ value will be generated in instances where when page range screening is performed and the columns used for 2017-05-03

14. PI62376: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-04-11

15. PI75342: ACCESS PATH FOR A QUERY WITH GROUP BY AND ORDER BY CLAUSES INCLUDES AN UNNECESSARY SORT.

For the following query the access path includes a sort although it is not necessary: 2017-04-03

16. PI72177: INEFFICIENT ACCESS PATH IS SELECTED.

List prefetch plan with higher cost may be chosen mistakenly when there is one table in the query block and the index covers 2017-04-03

17. PI70394: ABSTRACT=INEFFICIENT ACCESS PATH WITH SINGLE VALUE PAGE RANGE PREDICATE.

An inefficient access path can be chosen when page range access is used for a join predicate and the columns has a cardinality 2017-03-09

18. PI73338: INEFFICIENT JOIN ACCESS ON PARTITIONED TABLE

Nested loop join may be chosen as the join type when hybrid join would likely have been a better choice. 2017-02-01

19. PI66289: INDEX WITH LESS MATCHING COLUMNS IS CHOSEN FOR INNER TABLE WHICH MAY CAUSE BAD PERFORMANCE

Index with less matching columns is chosen for inner table which may cause bad performance 2017-01-12

20. PI65041: SQLACCESSPATH OF THE ENTRY WITH LATEST TIMESTAMP AND ACCESSTYPE NR IS NOT SELECTED WHEN BIND PKG WITH OPTHINT ...

When BIND PACKAGE with OPTHINT and EXPLAIN(YES), the latest timestamp NR path will lost the chance to compete with other 2017-01-12

21. PI58411: INCORROUT CAN OCCUR FOR AN UPDATE QUERY USING TEMPORAL TABLES.

Temporal table has multiple indexes. One index to support the primary key constraint and one to support queries against the 2017-01-04

22. PI69685: ABEND04E RC00E70005 AT DSNXGRDS DSNXOB2 M105 ON AN SQL STATEMENT WITH ATTRIBUTE CONCENTRATE STATEMENTS WITH ...

ABEND04E rc00e70005 at dsnxgrds dsnxob2 M105 in a prepared SQL statement with prepared attributes clause CONCENTRATE STATEMENTS 2017-01-03

23. PI71365: R-SCAN ACCESS PATH CHOSEN OVER MULTI-INDEX ACCESS FOR A TABLE WHICH QUALIFIES FOR NPGTHRSH BEHAVIOR

R-scan access path chosen over multi-index access for a table which qualifies for NPGTHRSH behavior. 2017-01-03

24. PI68896: INCORRECT FILTER FACTOR FOR PARTITION KEY OF VOLATILE TABLE

Db2 may calculate a incorrect filter factor for using BETWEEN and RANGE predicates, when a volatile table is defined as 2017-01-03

25. PI71415: POOR SQL PERFORMANCE WHEN THE ACCESS PATH USES NON-MATCHING INDEX FOR INDEX SKIPPING

Poor SQL Performance when the access path uses non-matching index for index skipping . 2017-01-03

26. PI63541: INEFFICIENT ACCESS PATH WITH EARLY OUT

Ineffecient access path can be chosen when the access plan qualifies for index skipping but no index covers the join 2017-01-03

27. PI68380: SUBOPTIMAL ACCESS PATH WITH A NESTED LOOP JOIN AND MULTI-INDEX ACCESS

A suboptimal access path is chosen when multi-index access is chosen as the inner table for a nested loop join. A hybrid join 2016-12-01

28. PI67499: INEFFICIENT NON-MATCHING INDEX SCAN IS SELECTED WHEN THERE ARE MORE THAN 1 TABLES IN THE QUERY BLOCK WITH ...

Inefficient non-matching index scan is selected when when the query contains DISTINCT in the query and there are more than 1 2016-12-01

29. PI70237: INEFFICIENT ACCESS PATH WHEN A QUERY HAS INSUBQ OR EXISTS-SUBQ AND THE SUBQUERY HAS A SINGLE TABLE

Inefficient access path when a query has INSUBQUERY or EXISTS-SUBQUERY and the subquery has a single table. Optimizer 2016-12-01

30. PI66248: INEFFICIENT ACCESS PATH WHEN NESTED LOOP JOIN USED TO ACCESS A INNER MATERIALIZED QUERY BLOCK

An inefficient rscan access path for the inner table of a nested loop join when the the inner table is a materialized workfile. 2016-12-01

31. PI64874: INDEX PROBING MAY ACCESS UNQUALIFIED PARTITION AT PREPARE TIME

Unqualified partitions are claimed by index probing at prepare time, that causes unnecessary locking issue and group buffer 2016-11-02

32. PI62713: INCORROUT WITH SQLSTATE 01003 OCCURRED FOR A QUERY WITH LEFT OUTER JOIN AND ORDER BY AND ALSO RUNNING WITH ...

incorrout (wrong data) with SQLSTATE 01003 occurred for a query with LEFT OUTER JOIN and ORDER BY and also running with Sort 2016-11-02

33. PI52204: INCORRECT DPSI LEAF PAGE ESTIMATION WHEN ALL THE PARTITION KEYS CONTAINS LOCAL PREDICATES

Inefficient access path is selected due to incorrect DPSI leaf page estimation when all the partition keys contains local 2016-10-03

34. PI64234: INEFFICIENT ACCESS PATH INVOLVING RANGE LIST PREDICATE(S)

A Range list access path could be chosen when a more efficient access path is available. 2016-10-03

35. PI64779: SELECT DISTINCT RETURNS DUPLICATE VALUES.

Incorrect output can occur when the DISTINCT keyword is used, matching index access is used on the IN-list, but the IN-list 2016-10-03

36. PI64089: PERFORMANCE IMPACT WITH CTE SELECTED FROM A VIEW

When a CTE is defined in a view, a sub-optimal access path may occur. A optimal access path will be estimated for the CTE, and 2016-10-03

37. PI61435: INCORRECT FILTER FACTOR MAY BE ASSIGNED FOR PAGE RANGE SCREENING PREDICATES WHEN PARTITION KEYS ARE DEFINED AS ...

Incorrect filter factor may be assigned for page range screening predicates when partition keys are defined as VARCHAR 2016-10-03

38. PI60761: Enable NPGTHRSH for qualified partitions of a partitioned table based on partition level NPAGES.

The code to enable NPGTHRSH for qualified partitions of a partitioned table based on partition level stats (NPAGES) 2016-09-02

39. PI61886: IN SUBQUERY FAILS TO MATCH ON INDEXABLE IN LIST COLUMN

For a query which contains an IN list on a subquery, Db2 currently will use the IN list column in an index match if the 2016-09-02

40. PI60056: INEFFICIENT ACCESS PATH WITH AGGREGATE FUNCTIONS SELECT(MAX) OR SELECT(MIN).

Less than optimal performance is the result when the optimizer makes a inefficient access path decision when the aggregate 2016-08-02

41. PI60333: UNNECESSARY SORT FOR ORDER BY WHEN DISTINCT/GROUP BY LIST COVERS A UNIQUE INDEX AND SELECTED INDEX SUPPORT ...

When a query contains an ORDER BY and DISTINCT/GROUP BY clause whose columns cover a unique index, Db2 selects an index with 2016-08-02

42. PI61893: INCORROUT WITH UPDATE STATEMENT AND CORRELATED SUBQUERY

A INCORROUT conditions occurs when a UPDATE statement is coded with a correlated subquery that contains a DISTINCT and FETCH 2016-08-02

43. PI60206: POOR SQL PERFORMANCE OF A QUERY THAT HAS A CORRELATED SUBQUERY COST REDUCTION

Poor SQL performance of a query that has a correlated subquery cost reduction . 2016-08-02

44. PI59348: INEFFICIENT INDEX SELECTION FOR THE INNER TABLE OF A JOIN

Less than optimal performance is the result when the optimizer makes a inefficient index selection for the inner table of a 2016-07-15

45. PI54868: PERFORMANCE DEGRADATION WITH PAGE RANGE FILTERING ON A JOIN PREDICATE AND NO LOCAL PREDICATES

Db2 is not estimating the correct index filtering when page range filtering is available on a join predicate and no local 2016-07-12

46. PI57513: GROUP BY/DISTINCT/MIN/MAX CLAUSES RESULT IN INCORRECT ACCESS PATH DUE TO INVALID COST REDUCTION

In V11, materialized work files which are used as the inner tables for left joins are being processed using a nested loop 2016-07-04

47. PI61155: INCORRECT COMPOUND FILTER FACTOR ESTIMATION FOR TWO RANGE PREDICATES

Inefficient index is selected due to incorrect compound filter factor estimation for two range predicates. 2016-07-04

48. PI50999: INCORRECT MATCHING FILTER FACTOR ESTIMATION FOR DPSI WHEN THE JOIN PREDICATES INVOLVE PARTITION KEY

Inefficient access path is used in a join or correlated subquery because IMFF of DPSI is not correctly estimated when the join 2016-06-02

49. PI58329: INCORRECT VALUE IN QW0022RX FIELD FOR IFCID022 WHEN UNDER REOPT(ONCE) OR REOPT(AUTO)

Incorrect value in QW0022RX field for IFCID022 when under REOPT ONCE OR REOPT AUTO . 2016-05-04

50. PI53774: INEFFICIENT ACCESS PATH CHOSEN WHEN Db2 INCORRECTLY ESTIMATES THE FILTERING WHEN COMBINING TWO RANGE ...

Inefficient access path may be chosen when Db2 incorrectly estimates the filtering when combining two range predicates into 2016-04-05

51. PI54988: OPTIMAL INDEX ACCESS MAY NOT BE USED WHEN STATISTICS IS NOT COLLECTED

Optimal index access may not be used when statistics is not collected. 2016-04-05

52. PI44963: INCORRECT COST ESTIMATION FOR I1 INDEX SCAN

Cost estimation in DSN_STATEMNT_TABLE is very high for one-fetch index scan(I1). Prefetch method is 'D' in the 2016-03-10

53. PI53790: INCORRECT ACCESS PATH CHOSEN FOR OPTIMIZE FOR 1 ROW

Db2 is producing an incorrect and inefficient access path in V11 when the OPTIMIZE FOR 1 ROW clause is added to a query which 2016-03-02

54. PI50063: AN INACCURATE COMPOUND FILTER FACTOR MAY BE PRODUCED FOR A PREDICATE WITH AN OR CLAUSE

AN INACCURATE COMPOUND FILTER FACTOR MAY BE PRODUCED FOR A PREDICATE WITH AN OR CLAUSE 2016-02-01

55. PI49507: DIFFERENT ACCESS PATH IS USED WHEN QUERY ON VIEW INSTEAD OF BASE TABLE.

A view is directly created on a base table. SQL statement on the view should be equivalent to the SQL on the base table. 2016-02-01

56. PI49557: INEFFICIENT ACCESS PATH FOR QUERY WITH FUNCTION MIN OR MAX BY INCORRECT REDUCTION FOR EARLY OUT

Inefficient access path for query with function MIN or MAX by incorrect reduction for early out 2016-01-04

So we are still talking about just over two per month…

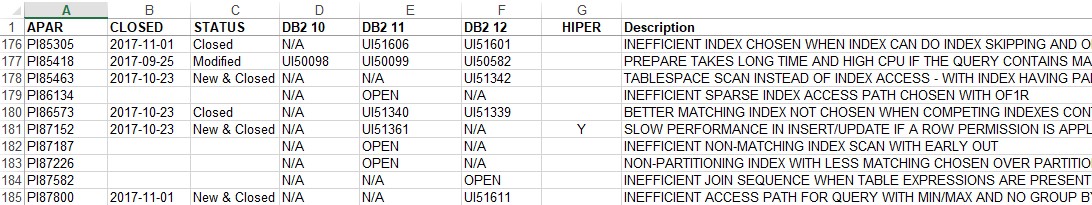

Db2 10 APAR Review sqlaccesspath

Just drop the B10 and add A10 to the search and do it all again.

1. PI49116: TOTALENTRIES TRUNCATED IN RTS FOR LARGE LOAD RESULTS IN REBUILD INDEX FAILING

2.6 billion row table load is truncating totalentries in RTS. 2016-12-01

2. PI66401: POOR PERF FOR XMLTABLE FUNCTION WITH PREDICATE BEING PUSHED INSIDE XPATH PRODUCES LOOSE HIGH KEY VALUE FOR ...

The XMLTABLE function is producing a loose high key value for MSIKEYP2 when searching for a specific docid. 2016-12-01

3. PI15740: INCORROUT DUPLICATED RECORDS RETURNED FOR QUERY WITH SQLACCESSPATH OF RANGELIST

The problem can happen when 1) range list access is used, 2016-01-30

Again, remove the yesHIPER and now there are 16 APARs:

1. PI85418: PREPARE TAKES LONG TIME AND HIGH CPU IF THE QUERY CONTAINS MANY OR PREDICATES WHICH MAY QUALIFY RANGE LIST ...

A complex query contains many OR predicates that potentially qualifies range list access(ACCESSTYPE=NR), the prepare of the 2017-09-25

2. PI76372: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-05-01

3. PI49116: TOTALENTRIES TRUNCATED IN RTS FOR LARGE LOAD RESULTS IN REBUILD INDEX FAILING

2.6 billion row table load is truncating totalentries in RTS. 2016-12-01

4. PI66135: INEFFICIENT INDEX MAY BE SELECTED

An inefficient index may be chosen by the optimizer when certain levels of uncertainly exist on some predicates. 2016-12-01

5. PI66401: POOR PERF FOR XMLTABLE FUNCTION WITH PREDICATE BEING PUSHED INSIDE XPATH PRODUCES LOOSE HIGH KEY VALUE FOR ...

The XMLTABLE function is producing a loose high key value for MSIKEYP2 when searching for a specific docid. 2016-12-01

6. PI58274: Inefficient access path for a query with OPTIMIZE FOR 1 ROW or FETCH FIRST 1 ROW when an inner index matches ...

When the outer composite is guaranteed to be a single row and OPTIMIZE FOR 1 ROW or FETCH FIRST 1 ROW is specified, Db2 is not 2016-07-04

7. PI59200: AN INDEX WITH LESS MATCHING COLUMN IS USED WHEN QUERY CONTAINS IN LIST PREDICATE

Optimal index may not be used for below query, SELECT * FROM TB1 2016-07-04

8. PI39053: CLAIM ACQUIRE FOR SET STATEMENTS AGAINST SYSTSTAB RESULTS IN DSNT501I RC00C200EA DURING CATALOG REORG

Claim acquire for SET statements against SYSTSTAB results in DSNT501I RC00C200EA during Db2 CATALOG REORG 2016-05-19

9. PI54892: DIFFERENT ACCESS PATH IS USED DUE TO A TIMING ISSUE IN OPTIMIZER

Different access path is used due to a timing issue in optimizer. 2016-05-04

10. PI53633: PERFORMANCE IMPACT WHEN DSNZPARM MAXRBLK IS SET GREATER THAN ACCEPTABLE VALUE

When MAXRBLK is set to a value of 16777216 or higher, the run time evaluation will be incorrect with the threshold 2016-05-04

11. PI57655: DIRECT ROW ACCESS IS DEFEATED WHEN THE QUERY HAS FFNR

Poor performance due to Direct Row Access through ROWID column being degraded to index/tablespace scan when the query has a 2016-05-04

12. PI54978: MERGE STATEMENT USES DEFAULT TABLE CARDINALITY WHEN CARDINALITY IS PROVIDED VIA THE "FOR N ROWS" CLAUSE

The merge statement uses the default table cardinality of 10000 when the cardinality is provided via the "FOR n ROWS" clause. 2016-05-04

13. PI56300: POOR BIND PERFORMANCE MAY OCCUR DUE TO UNNECESSARY ACCESS TO PLAN_TABLE WHEN SET STATEMENT IS APPLIED

Poor bind performance may occur due to unnecessary access to PLAN_TABLE when SET statement is applied. 2016-04-05

14. PI49018: ACCESS PATH ENHANCEMENT FOR A QUERY CONTAINING A JOIN PREDICATE ON A TABLE WITH DEFAULT COLUMN CARDINALITY ...

Db2 recognizes cases in which an ordered outer table can provide benefit on access to the inner table. When the outer table is 2016-03-02

15. PI53169: POOR SQL PERFORMANCE WHEN RANGE LIST ACCESS IS USED AND PTF UI22717 IS APPLIED 15/11/25 PTF PECHANGE

Poor SQL performance when range list access is used and PTF UI22717 is applied . 2016-03-02

16. PI15740: INCORROUT DUPLICATED RECORDS RETURNED FOR QUERY WITH SQLACCESSPATH OF RANGELIST

The problem can happen when 1) range list access is used, 2016-01-30

Remove the filter for Optimizer CSECTs and you get 17:

1. PI85418: PREPARE TAKES LONG TIME AND HIGH CPU IF THE QUERY CONTAINS MANY OR PREDICATES WHICH MAY QUALIFY RANGE LIST ...

A complex query contains many OR predicates that potentially qualifies range list access(ACCESSTYPE=NR), the prepare of the 2017-09-25

2. PI76372: INEFFICIENT ACCESS PATH CHOSEN WHEN NO MATCHING INDEX EXISTS TO SATISFY THE JOIN PREDICATE.

An inefficient access path can be selected for a query with no matching index to cover the join predicate. 2017-05-01

3. PI71693: REMOTE CONNECTION ATTEMPT RESULTS IN NONMATCHING INDEX SCAN OF DSNFEX01 WHEN ROW FOR AUTHID IS MISSING FROM ...

Db2DDF See APAR PI76121 for Db2 12 for z/OS. 2017-03-02

4. PI49116: TOTALENTRIES TRUNCATED IN RTS FOR LARGE LOAD RESULTS IN REBUILD INDEX FAILING

2.6 billion row table load is truncating totalentries in RTS. 2016-12-01

5. PI66135: INEFFICIENT INDEX MAY BE SELECTED

An inefficient index may be chosen by the optimizer when certain levels of uncertainly exist on some predicates. 2016-12-01

6. PI66401: POOR PERF FOR XMLTABLE FUNCTION WITH PREDICATE BEING PUSHED INSIDE XPATH PRODUCES LOOSE HIGH KEY VALUE FOR ...

The XMLTABLE function is producing a loose high key value for MSIKEYP2 when searching for a specific docid. 2016-12-01

7. PI58274: Inefficient access path for a query with OPTIMIZE FOR 1 ROW or FETCH FIRST 1 ROW when an inner index matches ...

When the outer composite is guaranteed to be a single row and OPTIMIZE FOR 1 ROW or FETCH FIRST 1 ROW is specified, Db2 is not 2016-07-04

8. PI59200: AN INDEX WITH LESS MATCHING COLUMN IS USED WHEN QUERY CONTAINS IN LIST PREDICATE

Optimal index may not be used for below query, SELECT * FROM TB1 2016-07-04

9. PI39053: CLAIM ACQUIRE FOR SET STATEMENTS AGAINST SYSTSTAB RESULTS IN DSNT501I RC00C200EA DURING CATALOG REORG

Claim acquire for SET statements against SYSTSTAB results in DSNT501I RC00C200EA during Db2 CATALOG REORG 2016-05-19

10. PI54892: DIFFERENT ACCESS PATH IS USED DUE TO A TIMING ISSUE IN OPTIMIZER

Different access path is used due to a timing issue in optimizer. 2016-05-04

11. PI53633: PERFORMANCE IMPACT WHEN DSNZPARM MAXRBLK IS SET GREATER THAN ACCEPTABLE VALUE

When MAXRBLK is set to a value of 16777216 or higher, the run time evaluation will be incorrect with the threshold 2016-05-04

12. PI57655: DIRECT ROW ACCESS IS DEFEATED WHEN THE QUERY HAS FFNR

Poor performance due to Direct Row Access through ROWID column being degraded to index/tablespace scan when the query has a 2016-05-04

13. PI54978: MERGE STATEMENT USES DEFAULT TABLE CARDINALITY WHEN CARDINALITY IS PROVIDED VIA THE "FOR N ROWS" CLAUSE

The merge statement uses the default table cardinality of 10000 when the cardinality is provided via the "FOR n ROWS" clause. 2016-05-04

14. PI56300: POOR BIND PERFORMANCE MAY OCCUR DUE TO UNNECESSARY ACCESS TO PLAN_TABLE WHEN SET STATEMENT IS APPLIED

Poor bind performance may occur due to unnecessary access to PLAN_TABLE when SET statement is applied. 2016-04-05

15. PI49018: ACCESS PATH ENHANCEMENT FOR A QUERY CONTAINING A JOIN PREDICATE ON A TABLE WITH DEFAULT COLUMN CARDINALITY ...

Db2 recognizes cases in which an ordered outer table can provide benefit on access to the inner table. When the outer table is 2016-03-02

16. PI53169: POOR SQL PERFORMANCE WHEN RANGE LIST ACCESS IS USED AND PTF UI22717 IS APPLIED 15/11/25 PTF PECHANGE

Poor SQL performance when range list access is used and PTF UI22717 is applied . 2016-03-02

17. PI15740: INCORROUT DUPLICATED RECORDS RETURNED FOR QUERY WITH SQLACCESSPATH OF RANGELIST

The problem can happen when 1) range list access is used, 2016-01-30
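The three Db2 10 searches above differ only in which filters are left in the search argument. As a minimal sketch (the function name `build_search` and its parameters are made up for illustration; the search terms themselves are the ones used in this article), the variants can be assembled like this:

```python
def build_search(release, optimizer_only=True, hiper_only=True):
    """Assemble an APAR search argument from the terms used in this article.

    release        -- release token, e.g. "A10" for Db2 10 or "C10" for Db2 12
    optimizer_only -- keep the dsnxo* filter for Optimizer-relevant CSECTs
    hiper_only     -- keep the yesHIPER filter
    """
    terms = ["5740xyr00", "sqlaccesspath"]
    if optimizer_only:
        terms.append("dsnxo*")
    if hiper_only:
        terms.append("yesHIPER")
    terms.append(release)
    return " AND ".join(terms)


# The three Db2 10 searches, from most to least restrictive.
print(build_search("A10"))
print(build_search("A10", hiper_only=False))
print(build_search("A10", optimizer_only=False, hiper_only=False))
```

Each call only builds the text of the search argument; pasting it into the APAR search is still a manual step.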

So here we are talking about slightly less than one per month (17 APARs in roughly 21 months), which implies that Db2 10 was “more stable” in this area. To be fair, Db2 10 is a lot older (GA date 2010-10-22) than 11 and 12, so I am not surprised that after seven years the bug rate is dropping off!
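A rough way to turn these APAR counts into monthly rates is to divide each count by the length of the review period. The sketch below assumes the period runs from 2016-01-01 to 2017-10-17 and uses an average month length; the counts 56 and 17 are the sizes of the two longest lists above, and the helper name `apars_per_month` is made up for illustration.

```python
from datetime import date

AVERAGE_DAYS_PER_MONTH = 365.25 / 12  # assumption: average month length


def apars_per_month(count, start, end):
    """Return the average number of APARs per month between two dates."""
    months = (end - start).days / AVERAGE_DAYS_PER_MONTH
    return count / months


# Assumed review period: start of 2016 up to the day of writing.
start = date(2016, 1, 1)
end = date(2017, 10, 17)

for label, count in [("56-entry list", 56), ("Db2 10 list", 17)]:
    print(f"{label}: {apars_per_month(count, start, end):.1f} APARs per month")
```

With these assumptions the 56-entry list works out to about 2.6 APARs per month and the Db2 10 list to about 0.8.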

What does all this mean?

What it means to me is that just looking at sqlaccesspath shows a pretty high turnover rate, and every one of these changes must be tested. There are HIPERs in here that could really hurt, and so applying maintenance, especially now in the Agile Db2 12 world, becomes even more critical to your business.

Are you ready for this?

See also our CDDC tool suite: Continuous Delivery – Deployment Check

CDDC supports fully automated testing of the new Db2 agile delivery:

– BIF/ICI Detection: checks for incompatibilities at FUNCTION LEVEL

– Access Path PreCheck

– Creates quality environments from a production clone

– Captures the entire workload incl. DCL, DDL, commands…

As usual, if you have any comments or queries please feel free to drop me a line!

TTFN

Roy Boxwell